Security teams have growing questions about generative AI, as it is being woven into many software-as-a-service (SaaS) solutions and cloud platforms at a feverish pace.

Despite AI's power to enhance business productivity, leaders are weighing the cost of security risks associated with it. Securing sensitive data accessed by AI is naturally a high priority as well, and organizations are attempting to safely enable gen AI tools and create a long-term lifecycle plan.

Through multiple webinars, customer connections, CISO roundtables, and risk assessments conducted at Varonis, we help answer many frequently asked questions about AI and the security risks of deploying it in organizations. This blog is a culmination of the top questions and answers pulled from those engagements.

With answers to the top AI security questions, you'll be able to confidently make organizational decisions on AI and uncover specific plans and strategies to consider. Let's dive in.

General AI security questions

#1 What is AI security?

Depending on your definition of AI, AI security can have different meanings.

AI security can pertain to safeguarding information from AI tools like large language models (LLMs). Organizations look to manage what data is accessed, protect against misuse, and monitor for threats such as jailbreaking or data poisoning. A component of AI security in this vein can encompass the prevention of shadow AI.

AI security also includes using AI to improve an organization's security posture. Enabling AI-powered cybersecurity can help organizations combat cyberattacks and data breaches, enhance remediation efforts, and reduce time to detection.

As AI adoption has accelerated, organizations must have complete visibility and control over AI tools and workloads. This makes the case for AI security being a fundamental part of data security. Proper AI security helps organizations deploy tools securely and combats the risk of leaking sensitive information.

#2 Shouldn't we wait for AI to mature further before jumping into it?

Our world won’t go back to the days before AI, so if you’re not already thinking of how to incorporate it in your organization or how it works, you’ll be playing catch up in the long run.

The other significant risk organizations are starting to face is shadow AI, where users download and use unsanctioned AI applications because their central IT organization has not provided an approved option. The rampant downloading of DeepSeek is a recent example of this trend.

However, we don’t recommend enabling AI tools in your org without properly educating yourself on them first. It is important to understand all the benefits and risks and how some solutions use the data you’re putting into them to ensure you can properly secure critical information before deployment.

“In a lot of ways, AI and gen AI represent an opportunity for security to lead by example and actually show others how there is a benefit," said Matt Radolec, Varonis VP of Incident Response and Cloud Operations during a webinar with CISOs and other industry leaders on the topic. "There is ROI from using these tools and it can be done safely.”

#3 What resources are there for those getting started with their AI journey?

Every AI tool operates differently. Determine which tool you’re interested in learning about early on, and research how it uses your data to identify potential concerns.

Many companies start with the National Institute of Standards and Technology (NIST) Cybersecurity Framework (CSF) or the AI Risk Management Framework (RMF) to establish their plans and policies for implementing all AI or specific technologies. Both frameworks are useful for exploring all possible risks and giving your organization an outline to document an understanding.

We also recommend that you get a risk assessment of your current security posture as an essential first step. If the gen AI tool you hope to use relies on user permissions, for example, that is an area of concern for your data security.

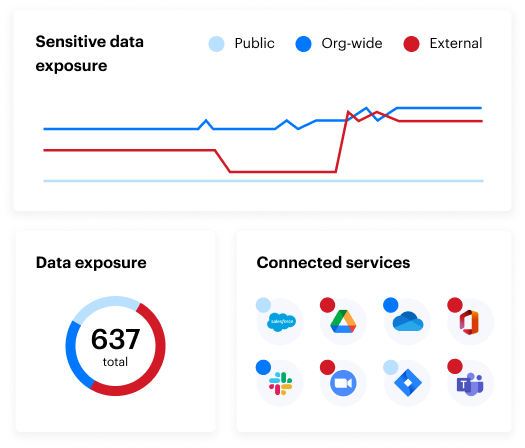

Varonis’ free Data Risk Assessment takes minutes to set up and delivers immediate value. In less than 24 hours, you’ll have a clear, risk-based view of the data that matters most and a clear path to automated data security.

AI security risk questions

During a recent customer event, a university employee shared that their institution wanted to be at the forefront of developing tech and the benefits it can bring, but wasn’t sure how to validate its concerns.

This is the case for most teams. While 67% of organizations report increasing their investments in gen AI, only a fifth feel highly prepared for the risk management associated with it.

So, let’s answer some questions about those risks and how your org can combat them.

#4 How do you adopt AI and mitigate this risk?

When adopting AI, the best way to do it securely is to ensure visibility into who has access to sensitive data, how they are using that data, and who needs it to actually do their job.

If your organization has low visibility into its data security posture, Copilot for Microsoft 365 and other gen AI tools have the potential to expose sensitive information to employees who shouldn’t see it or, even worse, to threat actors.

At Varonis, we created a live simulation that shows how simple prompts can easily expose your company’s sensitive data in Copilot. During this live demonstration, our industry experts also share practical steps and strategies to ensure a secure Copilot rollout and show you how to automatically prevent data exposure at your organization.

#5 Who takes responsibility when AI is wrong?

It’s important to start with the definition of wrong. If we are discussing a disconnect between prompts and results, then the data and AI engineers are responsible. However, if ‘wrong’ means misinformation that feeds into critical decisions, the risk and governance boards are responsible.

Before implementing an AI solution, the NIST AI RMF suggests that organizations document risk mapping. This mapping would include information about the user base and how they will use AI. The risk map, for example, may be drastically different for a market research team using AI to determine the best approach to marketing a product versus a clinician using AI in discerning a medical diagnosis. In the latter scenario, the health organization may want to establish a more frequent and detailed review process of prompts and responses. Regardless, there should always be a human in the loop and layers of accountability.

The CISO and legal teams are ultimately responsible; however, there must be a clearly defined shared responsibility model.

#6 What's the risk of using data generated through AI that could be under copyright or a patent?

Humans should always be in the loop to verify that what AI shares with users is factual and copyright-free. In some cases, it is advantageous to use two separate models to validate and detect potential plagiarism or IP theft.

#7 How can I mitigate the risks of using gen AI without saying no to my team?

Involve interested parties early on and often in the risk and policy generation process. Leaders should maintain a "yes, but" approach when introducing a new AI application and fielding these requests.

You can greenlight the request and set clear timeline expectations while also asking the requestor to assist in the written justification, defining use cases, and volunteering for pilot or beta testing. A thoughtful and conscious "yes" does not equate to uncontrolled chaos.

Insights on large language models (LLMs)

#8 How can I ensure a prompt is sanitized correctly before being answered by an LLM?

Security leaders want to sanitize prompts for sensitive data and malicious intent.

Real-time keystroke monitoring and sanitization can be taxing in terms of compute power and latency, and financially costly. Monitoring prompts after entry is far more feasible, and security teams are finding that continuous monitoring and alerting are effective at catching insider risks or malicious actors almost immediately after their first risky prompt.

Some data loss prevention (DLP) solutions in the market can stop a user from providing or referencing a file that has a sensitivity label applied. Some of these features are in preview and require organizations to have robust labeling programs. If a file isn't labeled, then this type of sanitization will not prevent the content from being processed.
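As a rough illustration of monitoring prompts after entry, the sketch below scans a submitted prompt against a couple of patterns and returns alerts for a security team to review. The pattern set, the `PromptAlert` type, and the `scan_prompt` function are hypothetical names chosen for this example; a real deployment would rely on a proper classification engine rather than a handful of regular expressions.

```python
import re
from dataclasses import dataclass

# Hypothetical detection rules, for illustration only.
SENSITIVE_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "secret_keyword": re.compile(r"\b(?:password|api[_ ]key|salary)\b", re.IGNORECASE),
}


@dataclass
class PromptAlert:
    user: str
    rule: str
    position: int  # character offset of the match; the matched text is not copied


def scan_prompt(user: str, prompt: str) -> list[PromptAlert]:
    """Return one alert per rule that matches the already-submitted prompt."""
    alerts = []
    for rule, pattern in SENSITIVE_PATTERNS.items():
        match = pattern.search(prompt)
        if match:
            alerts.append(PromptAlert(user, rule, match.start()))
    return alerts


if __name__ == "__main__":
    for alert in scan_prompt("jdoe", "Summarize the file with SSN 123-45-6789"):
        print(alert)
```

Because the scan runs on the logged prompt rather than on each keystroke, it adds no latency to the user's session; the trade-off is that it detects and alerts rather than blocks.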

#9 How can I prevent a jailbreak in the LLM?

Similar to prompt sanitization, real-time monitoring and detection are key in preventing a jailbreak attempt from fully executing.

One of the key indicators of a jailbreak attempt is user behavior. Often, the attempt is conducted by a stale account, an account without typical access, or a user who normally shows far less activity than the attempt involves (e.g., 10 prompts executed in a five-minute period). Additionally, advanced solutions now use behavior analytics to detect malicious intent and odd behavior (e.g., prompting on a subject that is atypical for that user).

Lastly, prompt classification and detection can trigger alerts when a user prompts for certain keywords or content types to stop the jailbreak attempt midstream.
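The behavioral indicators described above can be sketched as a small monitor that flags stale accounts and bursts of prompts. The thresholds (10 prompts in five minutes, 90 days of inactivity) and the `PromptRateMonitor` class are assumptions made for this example, not settings from any particular product.

```python
from collections import deque
from datetime import datetime, timedelta

# Assumed thresholds, for illustration only.
WINDOW = timedelta(minutes=5)
MAX_PROMPTS_IN_WINDOW = 10
STALE_AFTER = timedelta(days=90)


class PromptRateMonitor:
    """Tracks recent prompt timestamps per user and reports tripped indicators."""

    def __init__(self):
        self._recent = {}  # user -> deque of prompt timestamps inside the window

    def record(self, user, when, last_active_before_session):
        indicators = []
        # Indicator 1: the account had been idle for a long time before this session.
        if when - last_active_before_session > STALE_AFTER:
            indicators.append("stale_account")
        # Indicator 2: too many prompts inside the sliding window.
        window = self._recent.setdefault(user, deque())
        window.append(when)
        while window and when - window[0] > WINDOW:
            window.popleft()
        if len(window) >= MAX_PROMPTS_IN_WINDOW:
            indicators.append("prompt_burst")
        return indicators


if __name__ == "__main__":
    monitor = PromptRateMonitor()
    start = datetime(2025, 1, 1, 9, 0)
    for i in range(10):
        flags = monitor.record("svc-old", start + timedelta(seconds=20 * i),
                               start - timedelta(days=200))
    print(flags)
```

In practice these indicators would be combined with prompt classification and per-user baselines rather than fixed thresholds.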

#10 Is it worth it to build your own LLM models considering the high cost of infrastructure and time? Or do you recommend going with a SaaS model?

Organizations are finding value in various ‘studio’ and ‘custom agent’ generators that do not break the bank. It is good to first explore these low-code options before creating a pro-code model.

This approach will give teams a greater sense of the quality of their existing data and clearer definitions of desired outcomes. Security leaders also benefit from scalable low-code AI agent generators because they can theoretically apply protections universally across any agent tested and deployed.

Questions on internal training for AI

#11 Should I provide gen AI training for employees on using AI tools?

AI training should be extended company-wide, including to the board, executive leadership, and legal teams.

Many vendors, such as Microsoft and Salesforce, provide free AI security resources, and cybersecurity and IT conferences across the globe offer multiple AI training options. Creating training from scratch is therefore less of a requirement; instead, organizations should curate a set of required courses and sessions.

“The organization’s personnel and partners receive AI risk management training to enable them to perform their duties and responsibilities consistent with related policies, procedures, and agreements.”

NIST AI RMF, Govern 2.2

One-time training is not enough. Just as phishing simulation exercises are executed regularly, organizations need to create regular AI security awareness checkpoints with their users.

#12 Should gen AI tools be available to all in an org or restricted to a few?

You can certainly make the case for everyone in your organization to have access to AI tools. Yet most organizations are unable to genuinely consider enterprise-wide deployments because they lack governance programs.

According to a recent Gartner study, 57% of organizations limited their Copilot for Microsoft 365 rollout in 2024 to low-risk or trusted users, and 40% delayed their rollout by three months or more. Thus, organizations are often left wondering whether they can make AI tools available rather than whether they should.

Lagging behind competitors in AI adoption and consequently missing efficiencies or innovations can also create business risks. Without sanctioned and well-governed options, organizations increase the risk of users adopting shadow AI.

#13 Should you allow approved and/or unmanaged AI solutions? What guidelines should you give your employees?

The NIST AI Risk Management Framework (RMF) has four functions: Govern, Map, Measure, and Manage. Elements of all four should be applied to sanctioned AI solutions and those requested by stakeholders to be approved and implemented.

Users should not be able to download, use, or build AI applications without the express understanding and consent of security and IT teams. Conversely, they should have efficient and easy processes to request reasonable access or privileges to AI.

As a starting point, users can provide foundational information in a simple questionnaire about the positive and beneficial uses of the proposed AI system, total users, data intended to be used by AI, and known costs and risks. Users and business stakeholders need to have shared responsibility and knowledge about AI use. If they cannot provide a rudimentary explanation of the potential benefits and associated risks, then the implementation is likely to fail. Failure can come in the form of wasted revenue and company resources, or AI abuse.

Organizations need to understand their non-negotiables for AI to determine what guidelines must be rolled out to employees. For example, you may determine that certain regulated data should never be used in or by AI (e.g. ITAR data or certain PII). Those terms can change as the organization matures their governance and management capabilities or business circumstances change. Users may initially be prohibited from accessing AI applications on their managed mobile device or from a web app; however, after time and further analysis, that may become acceptable use.

AI data security questions

#14 How important are data classification and DLP when using AI? Should that be in the initial steps?

Knowing what data or resources are being accessed by AI is foundational.

The organization will lack reliable governance over certain data types if data is misclassified or not classified. Non-HR users should not be able to prompt for HR-controlled data, for example, but it’s impossible to enforce AI access control if that data is not classified and managed in some way.
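To make the dependency on classification concrete, here is a minimal sketch of an access check an AI retrieval layer might apply before surfacing a document. The `Document` type, the classification labels, and the `ALLOWED_DEPARTMENTS` policy are hypothetical. Note the design choice to fail closed: an unclassified document is never surfaced, because there is nothing to enforce against.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Document:
    path: str
    classification: Optional[str]  # None means the file was never classified


# Hypothetical policy: departments allowed to have each classification surfaced by AI.
ALLOWED_DEPARTMENTS = {
    "hr-confidential": {"hr"},
    "finance-restricted": {"finance"},
    "public": {"hr", "finance", "engineering"},
}


def can_surface(doc: Document, department: str) -> bool:
    """Decide whether an AI response may draw on this document for this user."""
    if doc.classification is None:
        return False  # fail closed: no label, no enforceable policy
    return department in ALLOWED_DEPARTMENTS.get(doc.classification, set())


if __name__ == "__main__":
    review = Document("reviews.xlsx", "hr-confidential")
    print(can_surface(review, "hr"), can_surface(review, "engineering"))
```

An organization that instead chose to fail open for unlabeled files would be relying entirely on underlying permissions, which is exactly the gap the paragraph above describes.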

Organizations need to consider classification in a broader and long-term sense. Many organizations will eventually have more than one enterprise AI solution deployed in their environment, and this multi-AI model will tap into multiple data sources. Therefore, the policies and technologies implemented now to classify data should be extensible and continuous across multiple cloud resources. Having one solution for classifying data in Salesforce and another in Azure will not be suitable for the future of multi-AI adoption.

DLP is a key element of monitoring and managing AI per the NIST AI RMF; however, DLP is a downstream control that cannot be an initial step. Nevertheless, it can be an initial element to consider in the early planning and strategy stages.

#15 Can you highlight the importance of least privilege in an organization when rolling out Copilot?

Least privilege is paramount, and in practice, automated least privilege is the most effective. Data is being created and changed at such a dizzying pace that security and risk teams cannot manually configure least privilege policies fast enough to keep up. People and identities are evolving at a similar rate.

Through automation, organizations can more realistically adapt their policies and access based on changes in data and identities. One resource historically may not have sensitive data, but through one action, it could be populated with information that requires greater control. Another instance of this dynamic is when a user or admin accidentally or maliciously changes privileges on a resource and causes ‘drift’ from initial configurations. Automated detection and remediation can adaptively find changes like these and address them without significant intervention.
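The drift scenario can be sketched as a comparison between a recorded baseline and the current permissions on each resource. The function names and the dictionary-of-sets data model are assumptions for this example; real platforms work against directory services and access control lists rather than in-memory dictionaries.

```python
def find_drift(baseline, current):
    """Return, per resource, the principals added or removed relative to the baseline."""
    drift = {}
    for resource in baseline.keys() | current.keys():
        before = baseline.get(resource, set())
        after = current.get(resource, set())
        added, removed = after - before, before - after
        if added or removed:
            drift[resource] = {"added": added, "removed": removed}
    return drift


def remediate(current, drift):
    """Revoke access granted since the baseline; removed access is left for human review."""
    fixed = {resource: set(principals) for resource, principals in current.items()}
    for resource, change in drift.items():
        fixed[resource] = fixed.get(resource, set()) - change["added"]
    return fixed


if __name__ == "__main__":
    baseline = {"/finance/payroll": {"finance-team"}, "/wiki": {"everyone"}}
    current = {"/finance/payroll": {"finance-team", "everyone"}, "/wiki": {"everyone"}}
    drift = find_drift(baseline, current)
    print(drift)
    print(remediate(current, drift))
```

A production workflow would also weigh whether the resource now holds sensitive data before revoking anything, since the baseline itself may no longer be appropriate.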

Copilot for Microsoft 365 questions

#16 What are the first steps before deploying Copilot in your org?

Before deploying Microsoft 365 Copilot in your organization, it is helpful to establish an AI governance group or board. This group should include representatives from compliance, identity, security teams, and business leaders.

The governance group will be responsible for creating clear governance and usage policies for Copilot, ensuring that all stakeholders are aligned and that the deployment is well-coordinated. Additionally, organizations can evaluate the ideal data security platform to protect against runtime and data-at-rest risks.

Another essential step along these lines is improving the underlying information governance within the Microsoft 365 tenant. Organizations should focus on mitigating oversharing risks by collaborating with security and compliance teams to implement scalable and automated tooling to classify, protect, and manage content throughout its life cycle.

Lastly, developing best-practice guidance and training is useful in helping employees securely store and share information. Integrating this training with employee onboarding and mandatory security training will promote broader adoption and ensure that employees are well-prepared to use Copilot or other AI solutions responsibly.


#17 Is Copilot worth the investment?

Like any other AI solution, Copilot is only as valuable as the data being accessed. Restricting whole sites and cutting off data access with a large, unwieldy axe can limit the value of Copilot. An intelligent data access program can more surgically control permissions without reducing value.

Investing in Copilot can greatly benefit organizations looking to enhance productivity and streamline operations. The tool is designed to integrate seamlessly with existing Microsoft 365 applications, providing users with AI-driven assistance that can automate routine tasks and improve efficiency. However, it is essential to address security and governance concerns to ensure the deployment is effective and secure.

Moreover, organizations with strong information governance practices are likely to see better adoption and utility of Copilot. By focusing on mitigating risks such as oversharing and misinformation, companies can maximize the value of their investment. Continuous monitoring and adaptive governance are crucial to maintaining the security and effectiveness of Copilot in the long term.

AI compliance questions

#18 Are there regulatory requirements an organization must comply with before launching AI?

Regulatory requirements, in many cases, follow the data. All data sets or information systems within the scope of a currently applicable regulation will, in turn, cause those requirements to be inherited by the AI systems accessing that data.

For example, under the Cybersecurity Maturity Model Certification (CMMC) Program, organizations must validate through assessment that they are protecting Federal Contract Information (FCI) and Controlled Unclassified Information (CUI). An organization with CMMC requirements must apply MFA to all systems containing CUI for users to access them. It would then also need to require MFA for users accessing AI applications that reason over that same data.

Even if the organization has written documentation and policies prohibiting the use of CUI by an LLM or AI application, it must comply with the requirements to establish and maintain baseline configurations that prevent users from doing so. Also, it would need to review logged events like AI agent prompts and results to determine if CUI was accessed.
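The idea that requirements follow the data can be sketched as a simple gap check: an AI application inherits the controls attached to every data type it can reach. The control names and the mapping below are illustrative only, drawn from the examples in this section; they are not a statement of what CMMC or any other regulation actually requires.

```python
# Illustrative mapping from data type to controls; not an authoritative list.
CONTROLS_BY_DATA_TYPE = {
    "CUI": {"mfa", "baseline_configuration", "audit_log_review"},
    "general": set(),
}


def inherited_controls(data_types):
    """Union of the controls attached to every data type the AI application can access."""
    required = set()
    for data_type in data_types:
        required |= CONTROLS_BY_DATA_TYPE.get(data_type, set())
    return required


def missing_controls(app_controls, data_types):
    """Controls the AI application inherits but has not yet implemented."""
    return inherited_controls(data_types) - set(app_controls)


if __name__ == "__main__":
    print(sorted(missing_controls({"mfa"}, ["CUI", "general"])))
```

Running a check like this during intake makes it easier to see which obligations a new AI application takes on before it is connected to regulated data.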

There are currently no regulations explicitly dedicated to AI that are fully established by law, enforcement, and penalty. The EU AI Act, for instance, has select chapters currently in place, but not others. Additionally, there is no enforcement body at this time.

It’s also important to recognize that many of the developing regulations focused on AI mostly address AI and LLM offerings created to be consumed by external parties (e.g., a direct-to-consumer application like ChatGPT and how OpenAI develops and manages it). Previous US Executive Orders and others incorporate regulations that are oriented towards bias and decision-making.

#19 Will there be an increase in the value of obtaining an AI management certification like ISO 42001?

In most cases, the value associated with a certificate or accreditation will not change consumer or customer behavior for organizations. There will be little business value in terms of brand, marketability, or revenue. However, practically, there is immense value in undergoing ISO 42001 training and the certification process because the byproduct of that exercise is documentation.

It is very challenging for organizations to create thoughtfully written policies, define and assign the right roles and responsibilities, and deploy technical risk management solutions from scratch. Therefore, a framework like ISO 42001 provides an outline suitable for almost any organization, regardless of size or industry, to define their AI management program. Many regulations are increasing their requirements on documentation, such as HIPAA, and the artifacts created by ISO 42001 preparation will, in turn, help the organization meet other regulatory requirements.

The certification process also unearths risks due to the analysis, discovery, and multiple perspectives required during the certification. When conducting a Varonis Data Risk Assessment (DRA), customers often find previously unknown risks. Each time an organization can dissect its own safeguards and practices is an opportunity to grow and modify its system security plan.

Questions about AI-powered tools and automation

#20 What are the right questions to ask when assessing a vendor that uses AI?

The right questions can depend on your entity’s privacy and/or regulatory requirements. In general, you should ask questions about the following areas:

- What data will the vendor be processing, where is that data being processed (residency), and how is it being processed?

- Will data used by the machine learning or AI solution be available to other entities or users outside my organization? Will it be used to train a model? If so, how?

- What laws and regulations do you currently comply or align with, and do you have documentation or documented agreements to this effect?

- What security architecture is embedded in the use and development of AI? Does the company have mitigations in place and the ability to detect vulnerabilities and/or threats to the AI system(s)?

#21 Can you use AI for security automation? What are the impacts?

At Varonis, our Managed Data Detection and Response (MDDR) customers use our AI-powered Data Security Platform to detect and respond to leading data threats with significant speed and efficiency. In addition, our MDDR offering can integrate with varying EDR and XDR solutions for easy AI querying against multiple sets of threat telemetry.

There are early signs that analysts and practitioners can effectively query wide sources of organizational data and external threat intelligence to identify and respond to threats faster. Repetitive tasks and alert noise are also being eliminated for analysts.

#22 What's your view on AI-powered tools used in shadow IT?

Two risky scenarios are developing in the enterprise IT landscape. The first is shadow AI, which I recently discussed on LinkedIn, and the second is shadow IT applications that use AI and use organizational data in the process.

In 2016, Gartner predicted that by 2020, a third of successful attacks experienced by enterprises would be on their shadow IT resources. That concern still holds in 2025, as 'shadow AI' becomes a leading attack vector and risk for data exposure. DeepSeek is one of the most recent and noteworthy examples, and users will continue to test and explore novel AI applications whenever they are given the opportunity or management tools do not prevent them.

Ultimately, it’s important that organizations provide safe and secure options to their users and developers and reduce the need to try other options. Organizations also need a clear path for testing and gaining approval for new AI solutions.

Reduce your risk without taking any.

This list of AI security questions is not exhaustive but includes some of the frequently asked questions we receive at Varonis. We revise and add to this blog consistently as technology, regulations, and threats change.

Don't see your question answered? Contact our team so we can change that.

Ready to safely begin your AI journey? Get started with Varonis’ free Data Risk Assessment. In less than 24 hours, you’ll have a clear, risk-based view of the data that matters most and a clear path to automated remediation.

