Machine Learning and Generative AI Use in Varonis

1. Responsible AI Guiding Principles

AI is a broad term that includes machine learning, large and small language models (LLMs and SLMs), and statistical algorithms.

Varonis has adopted a set of responsible AI guiding principles that govern all of its AI-based technology.

1.1. Customer Data is not used for Training AI Models: Varonis does not train models on customer data. Instead, model training uses data sets that are not based on customer data, or anonymized, derived indicators.

1.2. Transparency: Varonis is transparent and documents which AI models are used for various features and how they are being used. Such transparency is accomplished both through the information provided by Varonis to its customers and through the product.

1.3. Data residency: As a principle, Varonis ensures that customer data sent to AI complies with the data residency requirements of the customer’s chosen data locality, with some exceptions. See details in the privacy whitepaper and in the Varonis Data Processing Addendum.

1.4. Opt-out: The use of GenAI is tied to specific features and products. Customers that do not wish to use those GenAI based features can disable the functionality (for GenAI features used by the customer) or avoid licensing specific services or platforms (for GenAI used by Varonis).

1.5. Explainability: When technically feasible, Varonis explains the model's decision as part of the model's evaluation of the data.

1.6. Model Development Lifecycle: Varonis uses a structured model development lifecycle, which includes:

a. Tracking of which data was used for each model version training

b. Versioning of the models

c. Testing models for precision

d. Penetration testing of models

e. Testing models for fairness and safety

f. Controlled rollout of new model versions, with precision testing

g. Applying the above to both custom and third-party models

h. Auditing all model evaluations

i. Monitoring of model performance in production to detect drifts from precision
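The production monitoring step in item (i) can be pictured with a small sketch. This is an illustration under stated assumptions, not Varonis' implementation: the class name `PrecisionDriftMonitor`, the sliding window, and the tolerance value are all hypothetical, and it assumes each reviewed alert is labeled as a true or false positive.

```python
from collections import deque
from typing import Optional


class PrecisionDriftMonitor:
    """Tracks precision over a sliding window of reviewed alerts (hypothetical helper)."""

    def __init__(self, baseline_precision: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline_precision
        self.tolerance = tolerance
        # Each entry is True for a confirmed true positive, False for a false positive.
        self.outcomes = deque(maxlen=window)

    def record(self, is_true_positive: bool) -> None:
        self.outcomes.append(is_true_positive)

    def precision(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def has_drifted(self) -> bool:
        # Drift here means precision fell more than `tolerance` below the baseline.
        current = self.precision()
        return current is not None and current < self.baseline - self.tolerance


monitor = PrecisionDriftMonitor(baseline_precision=0.95, tolerance=0.05, window=10)
for outcome in [True] * 8 + [False] * 2:
    monitor.record(outcome)
print(monitor.precision(), monitor.has_drifted())
```

A sliding window keeps the measurement sensitive to recent behavior; a larger window would smooth out noise at the cost of slower detection.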

2. Varonis Features that Utilize AI

2.1. Attack Detection

Supervised Machine Learning for Threat Detection

Varonis employs machine learning, a subset of artificial intelligence (AI), to enhance cybersecurity in various domains. Machine learning uses algorithms and statistical techniques to enable computer systems to learn from data and make predictions or decisions without explicit programming.

a. Generating Security Alerts Based on Abnormal User Behavior: One crucial application of machine learning in cybersecurity is detecting security threats by analyzing user behavior. Varonis uses machine learning algorithms to monitor and analyze user or service account activities within an organization's network. By learning what constitutes "normal" behavior for users and service accounts, the system can identify deviations from these patterns, flagging them as potential security threats. These deviations can include unusual file access, login times, or data transfers, helping organizations respond promptly to potential breaches.

b. Learning Peers’ Association: Another important aspect of threat detection is understanding the relationships between users and their peers within the network. Varonis' machine learning models can learn and identify which users typically interact or share data with each other. This information helps in detecting suspicious activities, such as unauthorized data sharing or privilege escalation.

c. Learning Normal Working Hours: Machine learning is used to establish typical working hours for users within an organization. By analyzing historical data, the system can identify when users typically access resources, and any deviations from these patterns can trigger alerts. For example, if a user logs in during an unusual time, it could indicate a security incident.

d. Personal Devices Identification: Varonis' machine learning algorithms can also learn and keep track of the devices being used by each user. This information is crucial for detecting unauthorized access or compromised devices. The system can raise a security alert if a user suddenly logs in from an unfamiliar device.
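Items (c) and (d) above can be sketched as a simple frequency baseline. The example is a deliberately simplified stand-in for a learned model, not the method Varonis uses: `UserBaseline`, the `min_hour_count` threshold, and the finding names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime


class UserBaseline:
    """Learns per-user login hours and devices from historical events (illustrative only)."""

    def __init__(self, min_hour_count: int = 3):
        self.min_hour_count = min_hour_count
        self.hour_counts = defaultdict(lambda: defaultdict(int))  # user -> hour -> count
        self.devices = defaultdict(set)  # user -> known device IDs

    def learn(self, user: str, timestamp: datetime, device_id: str) -> None:
        self.hour_counts[user][timestamp.hour] += 1
        self.devices[user].add(device_id)

    def check(self, user: str, timestamp: datetime, device_id: str) -> list[str]:
        findings = []
        # An hour seen fewer than `min_hour_count` times is treated as unusual.
        if self.hour_counts.get(user, {}).get(timestamp.hour, 0) < self.min_hour_count:
            findings.append("unusual_hour")
        if device_id not in self.devices.get(user, set()):
            findings.append("unfamiliar_device")
        return findings


baseline = UserBaseline(min_hour_count=3)
for day in range(1, 6):
    baseline.learn("alice", datetime(2024, 1, day, 9, 30), "laptop-01")

usual = baseline.check("alice", datetime(2024, 1, 8, 9, 5), "laptop-01")
odd = baseline.check("alice", datetime(2024, 1, 8, 3, 0), "phone-77")
print(usual)  # []
print(odd)    # ['unusual_hour', 'unfamiliar_device']
```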

Unsupervised Machine Learning for Threat Detection

Varonis also uses unsupervised machine learning, which deals with unlabeled data. The machine learning (ML) algorithms are not provided with explicit output labels; instead, they discover patterns, structures, or relationships within the data.

Unsupervised machine learning plays a crucial role in cybersecurity threat detection, and it is the preferred option due to its ability to address certain challenges unique to the cybersecurity domain.

Some examples are:

a. Anomaly Detection: Unsupervised machine learning is particularly well-suited for anomaly detection in cybersecurity. Anomalies in network traffic, system behavior, or user activities can indicate security threats such as intrusions or breaches. Since these anomalies often represent previously unknown attack patterns, using supervised learning with pre-labeled data is impractical. Unsupervised learning algorithms can identify deviations from established patterns without knowing what constitutes a threat.

b. Data Exploration and Discovery: Cybersecurity datasets are vast and diverse, containing a wide range of data types, including logs, network traffic, system configurations, and user behavior. Unsupervised learning techniques, like clustering and dimensionality reduction, help to understand these complex datasets. They can reveal patterns, group similar events together, and reduce the data's dimensionality to focus on the most relevant features.

c. Zero-Day Attacks: Zero-day attacks exploit previously unknown vulnerabilities. Since there are no predefined attack signatures or labels for these threats, unsupervised learning is crucial. Anomaly detection algorithms can identify unexpected and potentially malicious activities, providing an early warning system against emerging threats.

d. Insider Threat Detection: Detecting insider threats, where legitimate users abuse their access, often requires unsupervised learning. These threats are challenging to identify using supervised learning because malicious insiders can act within the bounds of their legitimate permissions. Unsupervised techniques can help by modeling normal user behavior and flagging deviations that might indicate insider threats.

e. Continuous Learning: The threat landscape is constantly evolving, and new attack techniques emerge regularly. Unsupervised learning models can adapt to changing conditions and identify novel threats as they arise, making them suitable for continuous monitoring and detection.
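As a concrete picture of label-free anomaly detection, the sketch below flags outliers using the modified z-score, which is based on the median absolute deviation (MAD). It is one generic unsupervised technique chosen for illustration; the source does not state which algorithms Varonis uses, and the function name and sample counts are hypothetical.

```python
from statistics import median


def mad_anomalies(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of values whose modified z-score exceeds the threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against; this simple sketch gives up here
    # 0.6745 scales the MAD so the score is comparable to a standard z-score
    # for normally distributed data.
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical daily file-access counts for one account; no labels are supplied.
counts = [42, 38, 45, 40, 41, 39, 44, 950]
flagged = mad_anomalies(counts)
print(flagged)  # [7]
```

Median-based statistics are used instead of mean and standard deviation because a single extreme value would otherwise inflate the baseline it is being compared against.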

Which data is used for threat detection based on pre-trained models?

The main categories of inputs are elaborated below:

a. Monitored Events:

+ File Servers: Events raised by file servers provide insights into file access, modification, and sharing activities. This data is vital for detecting suspicious or unauthorized file access, insider threats, and data exfiltration attempts.

+ Network Traffic: Events from network devices such as DNS, firewalls, proxies, and VPNs offer information about network communication patterns. Analyzing these events can reveal potential anomalies, intrusions, or malicious traffic attempting to breach the network.

+ SaaS Services: Events from SaaS services like Microsoft 365, Zoom, Okta, and others are essential for monitoring user activities within cloud-based applications. Detecting unusual activities, login attempts, or data access patterns in these services helps identify potential cyber threats in the cloud environment.

+ Mail Servers: Events from mail servers like Exchange and Exchange Online provide insights into email communications. Analyzing these events can help identify email-based threats such as phishing, malware attachments, or suspicious email forwarding.

b. Entity Data:

+ User Information: User data, such as login activity, access privileges, and historical behavior, is used to create user profiles. Machine learning models can detect anomalies in user behavior, identifying potentially compromised accounts or insider threats.

+ Device Information: Information about servers and endpoints, including their configurations and patch levels, helps assess vulnerabilities and potential attack surfaces. Anomalies in device behavior or configurations can indicate security risks.

+ IP/Domain/URL Information: Monitoring IP addresses, domains, and URLs is essential for detecting malicious network activity, including connections to known malicious hosts, suspicious domain registrations, or attempts to access malicious websites.

+ Threat Intelligence: Incorporating threat intelligence feeds, which provide real-time information on known threats and vulnerabilities, helps cybersecurity systems stay updated and respond promptly to emerging threats. Machine learning models can leverage this intelligence to identify and mitigate threats based on known attack patterns.

2.2 Athena AI – Generative AI Assisted Alert Investigation

Athena AI helps SOC teams use Varonis systems through natural language queries, both to understand the security posture of their data and to investigate security alerts. To improve accuracy, when Athena AI is asked a question, Varonis determines the organizational context by retrieving the customer metadata relevant to the query: information about accounts, roles, resources, permissions, classification, and known alerts. The question sent to the AI is then augmented with this retrieved “organizational context,” making Athena AI aware of the specific customer environment so that it can provide relevant, precise, and tailored answers.

Which Generative AI Models Does Athena AI Use?
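The retrieve-then-augment flow described above is commonly called retrieval-augmented generation. A minimal sketch follows, assuming a naive keyword-overlap retriever and a plain-text prompt layout; the function names, record fields, and sample metadata are hypothetical and do not reflect Athena AI's actual retrieval logic or prompt format. No LLM call is made.

```python
def retrieve_context(question: str, metadata: list[dict], top_k: int = 2) -> list[dict]:
    """Rank metadata records by how many question words they share (naive retriever)."""
    words = set(question.lower().split())
    scored = []
    for record in metadata:
        overlap = len(words & set(record["text"].lower().split()))
        if overlap:
            scored.append((overlap, record))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [record for _, record in scored[:top_k]]


def build_prompt(question: str, context: list[dict]) -> str:
    """Prepend the retrieved organizational context to the user's question."""
    lines = ["Organizational context:"]
    lines += [f"- [{r['kind']}] {r['text']}" for r in context]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


metadata = [
    {"kind": "permission", "text": "finance-share is readable by group Finance-All"},
    {"kind": "alert", "text": "alert 1042 abnormal access to finance-share by svc-backup"},
    {"kind": "account", "text": "jdoe is a member of Marketing"},
]
question = "Who can read finance-share and are there alerts on it?"
context = retrieve_context(question, metadata)
prompt = build_prompt(question, context)
print(prompt)
```

Only the records that match the question are placed in the prompt, which keeps unrelated metadata (here, the `jdoe` account record) out of the request.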

Varonis does not currently train custom Generative AI (LLM) models and relies on Azure OpenAI.

For Large Language Models, Varonis is currently using OpenAI gpt4, gpt4o, gpt4o_mini and gpt4o1.

2.3 Managed Data Detection and Response

Customers that purchase an MDDR license from Varonis rely on the Varonis security organization to monitor their data and investigate alerts.

As part of alert investigations, MDDR specialists use Athena AI to investigate alerts more quickly, verify the correctness of Athena AI's recommendations, and implement remediation.

There is no way to turn off the use of Athena AI for MDDR. Customers who do not wish to use GenAI should avoid purchasing an MDDR license from Varonis.

2.4 AI Based Data Classification

Model Training

Varonis creates classification models based on public and licensed data sets and never trains models on customer data. Models and data sets are versioned to ensure the traceability of each model.
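One way to picture model-to-data-set traceability is a version record that stores a fingerprint of the training data. This is a sketch under assumptions, not a description of Varonis' tooling: `ModelVersionRecord`, `fingerprint_dataset`, and all names and values in the usage are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ModelVersionRecord:
    model_name: str
    model_version: str
    dataset_name: str
    dataset_sha256: str


def fingerprint_dataset(rows: list[str]) -> str:
    """Hash the data set row by row so any change yields a different fingerprint."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(row.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


# Hypothetical rows from a public or licensed data set.
rows = ["sample document A", "sample document B"]
record = ModelVersionRecord(
    model_name="example-classifier",
    model_version="1.4.0",
    dataset_name="example-licensed-set",
    dataset_sha256=fingerprint_dataset(rows),
)
print(json.dumps(asdict(record), indent=2))
```

Storing the hash alongside the model version makes it possible to check later whether a given data set is exactly the one a model version was trained on.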

Classification

Analyzing the content of files is crucial for assessing their risk and sensitivity. Machine learning and Generative AI models can classify files based on content, identifying documents that contain sensitive information, personally identifiable information (PII), or that violate data policies like GDPR, HIPAA, PCI DSS, or CCPA. This classification enables proactive data protection and compliance enforcement.

Explainability – File Analysis

Varonis uses a combination of traditional machine learning and Large Language Model queries to classify data contents. As part of this process, Varonis sends samples of the data to OpenAI LLMs. The samples are not stored in either Varonis SaaS or OpenAI.

Each classification has explainability features, provided via File Analysis, which explain why the models reached a specific classification decision.

2.5 Varonis AI Email Interceptor

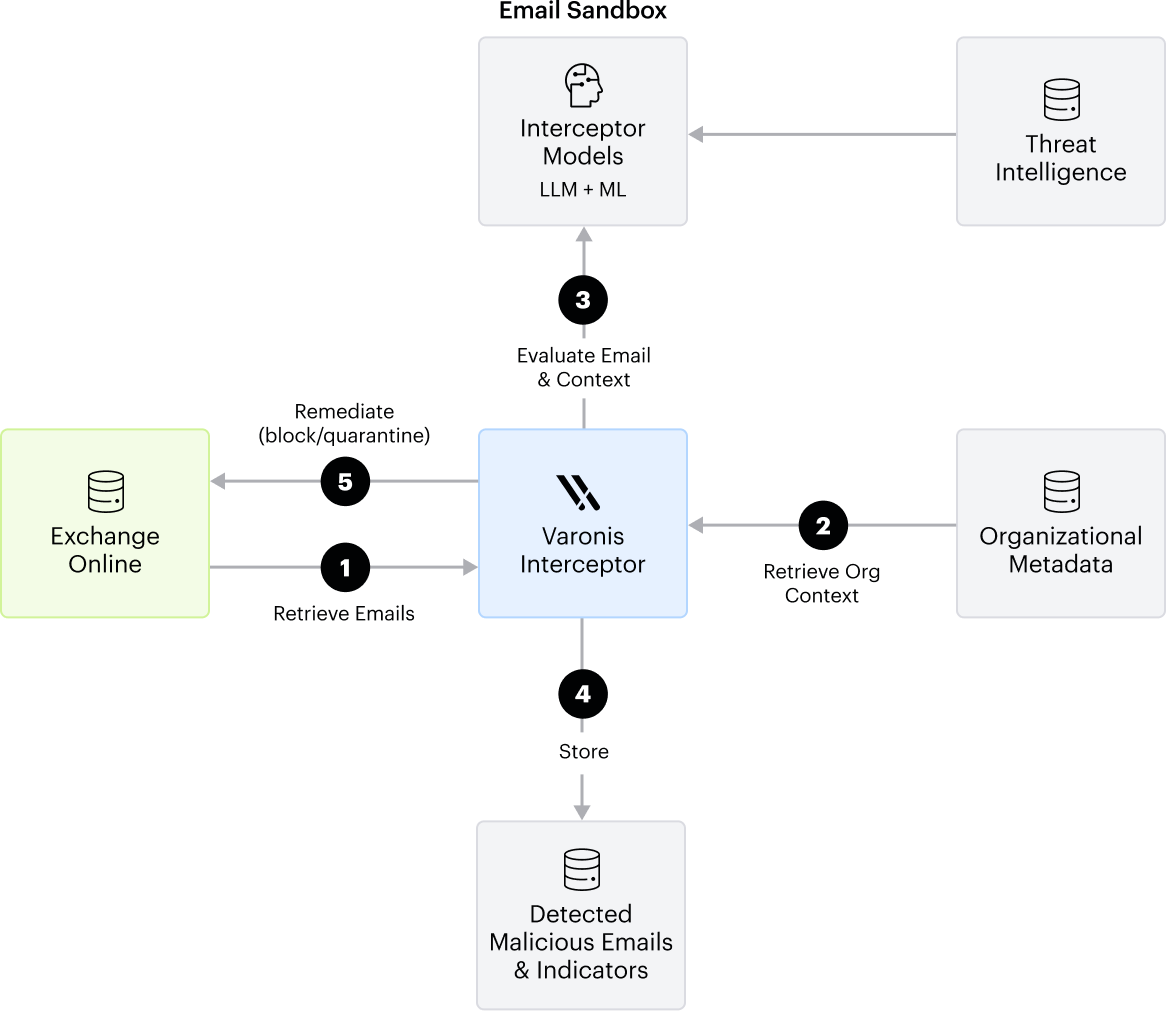

a. Email Blocking: The Varonis Email Interceptor integrates with the customer’s Microsoft Exchange Online environment to analyze emails delivered to user mailboxes. It identifies and removes emails containing detected threats.

As part of the detection process, each email is:

+ Augmented with enriched organizational context collected from the customer’s environment;

+ Evaluated using proprietary LLM and ML models hosted within the Varonis data center; and

+ Enhanced with external threat intelligence feeds, which are retrieved and incorporated as additional context during evaluation.

Blocked emails and their associated indicators of maliciousness are securely stored to support customer-side investigation and incident response.

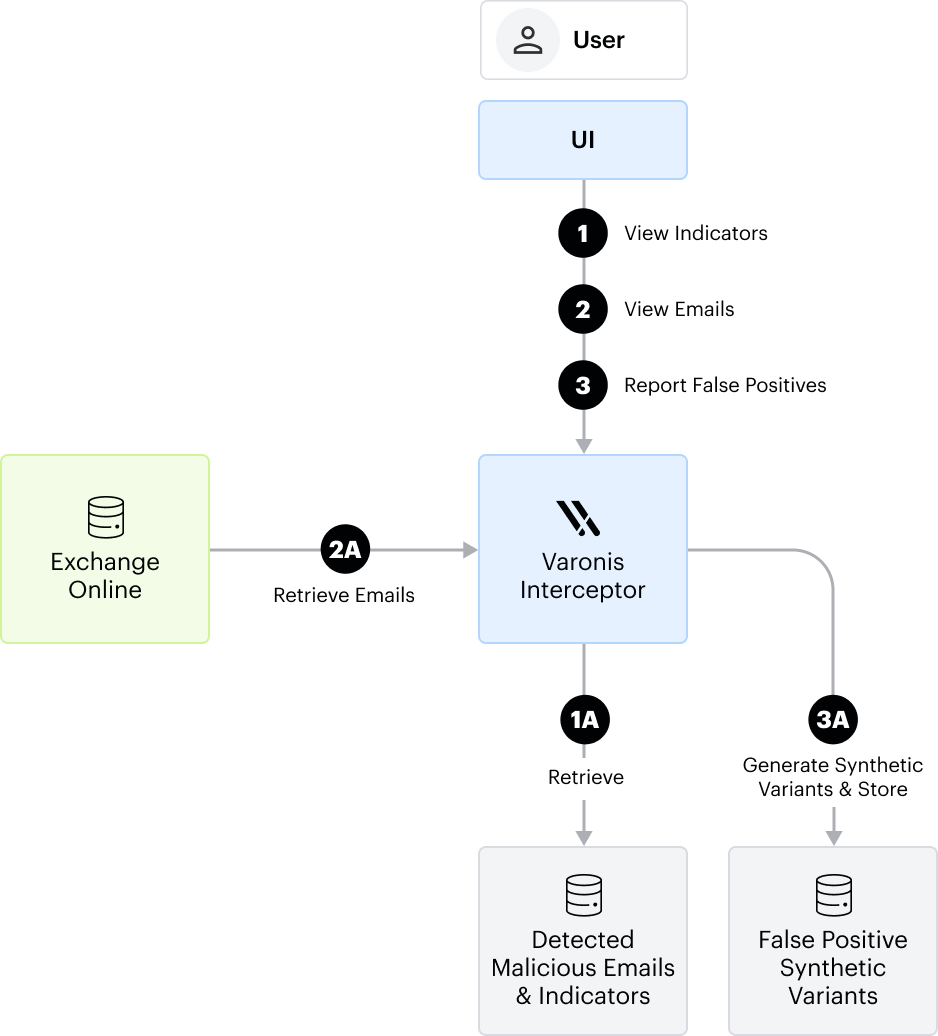

b. Investigation & False Positive Reporting: The customer’s SOC team can connect to the Varonis Email Interceptor to investigate email-based attacks.

Blocked emails and their associated indicators are stored at the time of interception, enabling thorough review and analysis. In addition, customers can search for and retrieve benign emails. These emails are not stored within the Varonis Email Interceptor and are instead fetched on demand from the customer’s Microsoft Exchange environment.

If a customer reports an email as a false positive, the Varonis Email Interceptor solution will remove it from the repository of stored malicious emails. Based on the indicators extracted from the reported email, the Varonis Email Interceptor solution will generate a set of synthetic variants. These variants are used to enhance detection accuracy and reduce the likelihood of future false positives. For clarification, synthetic variants do not include any content from the original false positive emails.

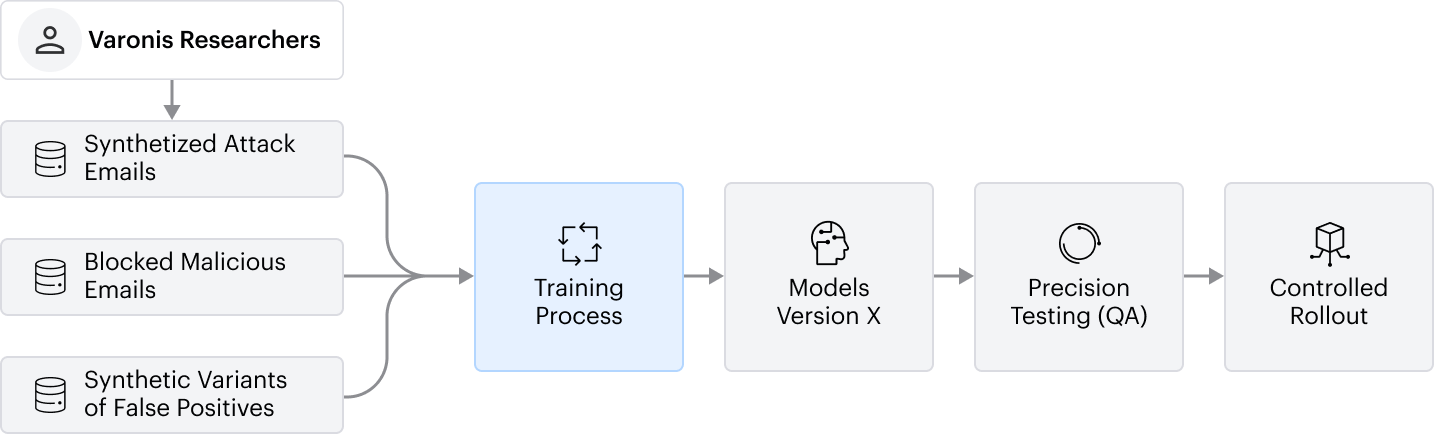

c. Model Training: ML & LLM models are periodically retrained to maintain optimal detection performance and adaptability to evolving threats. Model training is based on three primary data sources:

+ Synthesized attack emails created by Varonis’ security researchers.

+ Blocked malicious emails, which reflect the continuously evolving threat landscape.

+ Synthetic variants of reported false positives, designed to reduce their weights and prevent recurrence. These variants are generated without incorporating any content from the original false positive emails.

All of the above enables Varonis to train Varonis Interceptor models without using customer data, while addressing the rapidly evolving attack landscape and maintaining a low false-positive rate.

Following retraining, models undergo testing and roll-out according to the Model Development Lifecycle, as outlined above. This includes precision testing, controlled rollout across customer tenants, and continuous drift monitoring to ensure stability and accuracy.

3. FAQs

Cybersecurity is a dynamic field due to the continuous evolution of cyber threats. Hackers are always coming up with new techniques and strategies to bypass security measures. Hence, the attack patterns change constantly. This means that the type of cyberattack one customer experiences could be very different from what another customer encounters. Even large customers or organizations, with their vast and complex networks, may not face every type of cyberattack.

To build robust defense systems against this wide array of cyber threats, we use machine learning models. These models are designed to learn from data and improve over time. However, the models need to be trained on relevant data to be effective.

This is where the aggregated and anonymized data from all customers comes into play. By collecting data about cyberattacks from a large number of customers, we provide a comprehensive picture of the various attack patterns. This data is anonymized to ensure customer privacy is maintained.

The machine learning models are then trained on this anonymized and aggregated data. This way, the models can learn from a wide range of attack patterns, not just those experienced by a single customer. As a result, these models can better predict and identify different types of cyberattacks, thereby providing stronger protection.

Once trained, these models are then used to protect all customers. Regardless of the type of attacks a customer has previously faced, they benefit from the collective learning of the models. This approach allows Varonis to stay one step ahead of hackers and offer their customers the best possible protection against cyber threats.

Customer textual data is sent to LLMs to provide product functionality for your benefit. No customer textual data is ever used to train our models. We can also use textual data for support. We do not retain or use it for other purposes. Varonis uses raw textual data to derive anonymized numerical risk indicators, on which models are then trained.

Varonis performs extensive testing prior to releasing AI features, ensuring high precision and accuracy of all features. In addition, because models can drift over time, Varonis monitors their performance in production to detect and correct such drift. Varonis also controls the “temperature” setting of the models, which reduces hallucinations while balancing this against precision.

That said, the nature of generative AI is that responses may be inaccurate or even plain wrong. Therefore, a human should always review the recommended remediation actions prior to implementing them.
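The effect of temperature can be illustrated with generic temperature scaling of a softmax distribution. This is a textbook sketch, not Varonis' configuration: the logits and temperature values are made up, and the source does not state which settings are used.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
default = softmax_with_temperature(logits, temperature=1.0)
low = softmax_with_temperature(logits, temperature=0.2)
print([round(p, 3) for p in default])
print([round(p, 3) for p in low])
```

At the lower temperature almost all probability mass moves to the top-scoring candidate, so sampling becomes more deterministic and less likely to pick improbable continuations.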

Varonis' Data Security Platform is built on top of Microsoft Azure OpenAI and uses the latest public LLMs provided via the Azure OpenAI platform. We have a written contract with Microsoft, which ensures that Microsoft, as a subprocessor, complies with our security policies and does not retain our customer data or use it to train models.

Varonis utilizes Azure OpenAI built-in safeties at both the training and serving stages.

Yes. Varonis stores data in the geography selected by customers and does not transfer any customer data outside of that selected geography, unless indicated otherwise in the Varonis Data Processing Agreement and subprocessor list.

Yes. You can opt in and out of using GenAI features at any time via the settings screen on Varonis’ Web Data Analytics user interface.

Note that it is not possible to opt out of MDDR’s use of GenAI. If you do not wish to use GenAI, you should not license MDDR.

Varonis does not license or sell its models and only uses them to protect its customers.

Varonis AI and ML models are continuously scanned against OWASP Machine Learning Security:

a. https://mltop10.info/about_owasp.html

b. https://owasp.org/www-project-ai-security-and-privacy-guide/

This includes:

1. Guardrails provided by OpenAI

2. Internal and external penetration testing conducted by Varonis

3. Sanitization against prompt injection

4. Periodic human review of the logs

Our entire infrastructure is monitored by the Varonis SOC and compliant with ISO, HIPAA, PCI, and AICPA requirements.

Varonis has signed the CSA AI Trustworthy Pledge to demonstrate our commitment to developing and providing responsible AI systems. This pledge highlights our alignment with guiding AI principles such as transparency, ethical accountability, safety, and privacy.

Have questions? Contact us.
