This article is part of the series "Practical PowerShell for IT Security".

Last time, with a few lines of PowerShell code, I launched an entire new software category, File Access Analytics (FAA). My 15 minutes of fame are almost over, but I was able to make the point that PowerShell has practical file event monitoring aspects. In this post, I’ll finish some old business with my FAA tool and then take up PowerShell-style data classification.

Event-Driven Analytics

To refresh memories, I used the Register-WmiEvent cmdlet in my FAA script to watch for file access events in a folder. I also created a mythical baseline of event rates to compare against. (For wonky types, there’s a whole area of measuring these kinds of things — hits to web sites, calls coming into a call center, traffic at espresso bars — that was started by this fellow.)

When file access counts reach above normal limits, I trigger a software-created event that gets picked up by another part of the code and pops up the FAA “dashboard”.

This triggering is performed by the New-Event cmdlet, which allows you to send an event, along with other information, to a receiver. To read the event, there’s the Wait-Event cmdlet. The receiving part can even be in another script as long as both event cmdlets use the same SourceIdentifier (Bursts, in my case).

These are all operating systems 101 ideas: effectively, PowerShell provides a simple message passing system. Pretty neat considering we are using what is, after all, a bleepin’ command language.
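To see the message passing in isolation, here is a minimal sketch with no file monitoring involved. The identifier Demo.Bursts and the two file names are made up for illustration; the cmdlets and parameters are the same ones the FAA script relies on.

```powershell
# Sender: queue a custom event carrying a message and a payload.
# New-Event also returns the event object, which is discarded here.
$null = New-Event -SourceIdentifier Demo.Bursts -MessageData "We're in Trouble" -EventArguments @('a.txt', 'b.txt')

# Receiver: block (for at most 5 seconds) until an event with the same identifier shows up.
$received = Wait-Event -SourceIdentifier Demo.Bursts -Timeout 5

$message = $received.MessageData   # the -MessageData value
$files   = $received.SourceArgs    # the -EventArguments values

# Wait-Event does not dequeue the event, so remove it explicitly.
Remove-Event -EventIdentifier $received.EventIdentifier
```

Removing by EventIdentifier rather than by SourceIdentifier only clears the event that was just read, which matters if several events with the same identifier can queue up.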

Anyway, the full code is presented below for your amusement.

$cur = Get-Date

$Global:Count=0

$Global:baseline = @{"Monday" = @(3,8,5); "Tuesday" = @(4,10,7);"Wednesday" = @(4,4,4);"Thursday" = @(7,12,4); "Friday" = @(5,4,6); "Saturday"=@(2,1,1); "Sunday"= @(2,4,2)}

$Global:cnts = @(0,0,0)

$Global:burst = $false

$Global:evarray = New-Object System.Collections.ArrayList

$action = {

$Global:Count++

$d=(Get-Date).DayofWeek

$i= [math]::floor((Get-Date).Hour/8)

$Global:cnts[$i]++

#event auditing!

$rawtime = $EventArgs.NewEvent.TargetInstance.LastAccessed.Substring(0,12)

$filename = $EventArgs.NewEvent.TargetInstance.Name

$etime= [datetime]::ParseExact($rawtime,"yyyyMMddHHmm",$null)

$msg="$($etime): Access of file $($filename)"

$msg|Out-File C:\Users\bob\Documents\events.log -Append

$Global:evarray.Add(@($filename,$etime))

if(!$Global:burst) {

$Global:start=$etime

$Global:burst=$true

}

else {

if($Global:start.AddMinutes(15) -gt $etime ) {

$Global:Count++

#File behavior analytics

$sfactor=2*[math]::sqrt( $Global:baseline["$($d)"][$i])

if ($Global:Count -gt $Global:baseline["$($d)"][$i] + 2*$sfactor) {

"$($etime): Burst of $($Global:Count) accesses"| Out-File C:\Users\bob\Documents\events.log -Append

$Global:Count=0

$Global:burst =$false

New-Event -SourceIdentifier Bursts -MessageData "We're in Trouble" -EventArguments $Global:evarray

$Global:evarray= [System.Collections.ArrayList] @();

}

}

else { $Global:burst =$false; $Global:Count=0; $Global:evarray= [System.Collections.ArrayList] @();}

}

}

Register-WmiEvent -Query "SELECT * FROM __InstanceModificationEvent WITHIN 5 WHERE TargetInstance ISA 'CIM_DataFile' and TargetInstance.Path = '\\Users\\bob\\' and targetInstance.Drive = 'C:' and (targetInstance.Extension = 'txt' or targetInstance.Extension = 'doc' or targetInstance.Extension = 'rtf') and targetInstance.LastAccessed > '$($cur)' " -sourceIdentifier "Accessor" -Action $action

#Dashboard

While ($true) {

$args=Wait-Event -SourceIdentifier Bursts # wait on Burst event

Remove-Event -SourceIdentifier Bursts #remove event

$outarray=@()

foreach ($result in $args.SourceArgs) {

$obj = New-Object System.Object

$obj | Add-Member -type NoteProperty -Name File -Value $result[0]

$obj | Add-Member -type NoteProperty -Name Time -Value $result[1]

$outarray += $obj

}

$outarray|Out-GridView -Title "FAA Dashboard: Burst Data"

}

Please don’t pound your laptop as you look through it.
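The burst test buried in the script is easier to follow when pulled out on its own. The sketch below copies the baseline table and the arithmetic from the script; the function name Get-BurstThreshold and its parameter are hypothetical, added only for illustration.

```powershell
# Baseline table from the script: expected accesses per 8-hour slot, per weekday.
$baseline = @{
    Monday = @(3,8,5); Tuesday = @(4,10,7); Wednesday = @(4,4,4); Thursday = @(7,12,4)
    Friday = @(5,4,6); Saturday = @(2,1,1); Sunday = @(2,4,2)
}

# Hypothetical helper: returns the access count that must be exceeded to flag a burst.
function Get-BurstThreshold {
    param([datetime] $When)
    $day      = $When.DayOfWeek.ToString()
    $slot     = [int][math]::Floor($When.Hour / 8)   # 0 = night, 1 = day, 2 = evening
    $expected = $baseline[$day][$slot]
    $sfactor  = 2 * [math]::Sqrt($expected)          # same scaling as the script
    $expected + 2 * $sfactor
}

# Wednesday at 13:00 falls in slot 1, where the baseline is 4:
# 4 + 2 * (2 * sqrt(4)) = 12
$threshold = Get-BurstThreshold -When ([datetime]'2024-01-03T13:00:00')
```

Written this way, the cutoff is visibly the baseline plus four times its square root, which is a fairly conservative setting if the access counts behave like a Poisson process.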

I’m aware that I continue to pop up separate grid views, and there are better ways to handle the graphics. With PowerShell, you do have access to the full .NET Framework, so you could create and access objects (list boxes, charts, etc.) and then update them as needed. I’ll leave that for now as a homework assignment.

Classification is Very Important in Data Security

Let’s put my file event monitoring on the back burner, as we take up the topic of PowerShell and data classification.

At Varonis, we preach the gospel of “knowing your data” for good reason. In order to work out a useful data security program, one of the first steps is to learn where your critical or sensitive data is located — credit card numbers, consumer addresses, sensitive legal documents, proprietary code.

The goal, of course, is to protect the company’s digital treasure, but you first have to identify it. By the way, this is not just a good idea: many data security laws and regulations (for example, HIPAA) as well as industry data standards (PCI DSS) require asset identification as part of a real-world risk assessment.

PowerShell should have great potential for use in data classification applications. Can PS access and read files directly? Check. Can it perform pattern matching on text? Check. Can it do this efficiently on a somewhat large scale? Check.

No, the PowerShell classification script I eventually came up with will not replace the Varonis Data Classification Framework. But for the scenario I had in mind, an IT admin who needs to watch over an especially sensitive folder, my PowerShell effort gets more than a passing grade, say B+!

WQL and CIM_DataFile

Let’s now return to WQL, which I referenced in the first post on event monitoring.

Just as I used this query language to look at file events in a directory, I can tweak the script to retrieve all the files in a specific directory. As before I use the CIM_DataFile class, but this time my query is directed at the folder itself, not the events associated with it.

Get-WmiObject -Query "SELECT * From CIM_DataFile where Path = '\\Users\\bob\\' and Drive = 'C:' and (Extension = 'txt' or Extension = 'doc' or Extension = 'rtf')"

Terrific! This line of code will output an array of CIM_DataFile objects, one per matching file, with the full path name in each object's Name property.
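The fiddly part of the query is the Path clause: the drive is split off into its own condition, and every backslash in the rest of the path is doubled. A small sketch of a helper that builds the same query string from an ordinary folder path follows. The function name New-DataFileQuery and its parameters are hypothetical, and it assumes the folder is given with a drive letter and a trailing backslash.

```powershell
# Hypothetical helper: builds the CIM_DataFile query for one folder and a list of extensions.
function New-DataFileQuery {
    param(
        [string]   $Folder,      # assumed form: C:\Users\bob\ (drive letter, trailing backslash)
        [string[]] $Extension    # for example txt, doc, rtf
    )
    $drive = $Folder.Substring(0, 2)                    # 'C:'
    $path  = $Folder.Substring(2).Replace('\', '\\')    # double every backslash for WQL
    $exts  = ($Extension | ForEach-Object { "Extension = '$_'" }) -join ' or '
    "SELECT * From CIM_DataFile where Path = '$path' and Drive = '$drive' and ($exts)"
}

$query = New-DataFileQuery -Folder 'C:\Users\bob\' -Extension txt, doc, rtf
```

The resulting string can be passed to the -Query parameter. Get-WmiObject is not available in PowerShell 6 and later, where Get-CimInstance plays the same role and also takes a -Query parameter.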

To read the contents of each file into a variable, PowerShell conveniently provides the Get-Content cmdlet. Thank you Microsoft.
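One detail worth knowing before pattern matching: by default Get-Content returns an array with one string per line, while the -Raw switch returns the whole file as a single string. The sketch below shows the difference on a throwaway file; the file name faa-sample.txt and its contents are made up for illustration.

```powershell
# Create a two-line sample file in the temp directory.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'faa-sample.txt'
Set-Content -Path $path -Value 'Top Secret', 'Project Snowflake budget'

$lines = Get-Content -Path $path        # array of strings, one per line
$text  = Get-Content -Path $path -Raw   # one string, line breaks included

Remove-Item -Path $path
```

For counting marker text either form can work, but -Raw avoids relying on PowerShell's implicit array-to-string conversion when the content is handed to a regular expression.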

I need one more ingredient for my script, which is pattern matching. Not surprisingly, PowerShell has a regular expression engine. For my purposes it’s a little bit of overkill, but it certainly saved me time.

Security pros have often told me that companies should explicitly mark documents or presentations containing proprietary or sensitive information with an appropriate footer, say, Secret or Confidential. It’s a good practice, and of course it helps in the data classification process.

In my script, I created a PowerShell hashtable of possible marker texts with an associated regular expression to match it. For documents that aren’t explicitly marked this way, I also added special project names — in my case, snowflake — that would also get scanned. And for kicks, I added a regular expression for social security numbers.

The code block I used to do the reading and pattern matching is listed below. The file name to read and scan is passed in as a parameter.

$Action = {

Param (

[string] $Name

)

$classify =@{"Top Secret"=[regex]'[tT]op [sS]ecret'; "Sensitive"=[regex]'([Cc]onfidential)|([sS]nowflake)'; "Numbers"=[regex]'[0-9]{3}-[0-9]{2}-[0-9]{4}' }

$data = Get-Content $Name

$cnts= @()

foreach ($key in $classify.Keys) {

$m=$classify[$key].matches($data)

if($m.Count -gt 0) {

$cnts+= @($key,$m.Count)

}

}

$cnts

}
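To try the matching logic without touching the file system, here is a sketch of the same idea applied to a string. The function name Get-MarkerCount, the sample text, and the choice to return a label-to-count dictionary are assumptions for illustration, not the article's final design. The number pattern uses the standard 3-2-4 digit layout of social security numbers.

```powershell
# Hypothetical helper: counts marker matches in a block of text.
function Get-MarkerCount {
    param([string] $Text)
    $classify = [ordered]@{
        'Top Secret' = [regex]'[tT]op [sS]ecret'
        'Sensitive'  = [regex]'([Cc]onfidential)|([sS]nowflake)'
        'Numbers'    = [regex]'[0-9]{3}-[0-9]{2}-[0-9]{4}'
    }
    $result = [ordered]@{}
    foreach ($key in $classify.Keys) {
        $count = $classify[$key].Matches($Text).Count
        if ($count -gt 0) { $result[$key] = $count }   # keep only labels that matched
    }
    $result
}

# Made-up sample: two "Sensitive" hits, one number, no "Top Secret".
$sample = "Project Snowflake - Confidential`nSSN on file: 078-05-1120"
$found  = Get-MarkerCount -Text $sample
```

Returning a dictionary keyed by label keeps each count attached to its category, which is simpler to consume than a flat array of alternating names and numbers.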

Magnificent Multi-Threading

I could have just simplified my project by taking the above code and adding some glue, and then running the results through the Out-GridView cmdlet.

But this being the Varonis IOS blog, we never, ever do anything nice and easy.

There is a point I’m trying to make. Even for a single folder in a corporate file system, there can be hundreds, perhaps even a few thousand files.

Do you really want to wait around while the script is serially reading each file?

Of course not!

Large-scale file I/O applications, like what we’re doing with classification, are very well suited to multi-threading: you can launch lots of file activity in parallel and thereby significantly reduce the delay in seeing results.

PowerShell does have a usable (if clunky) background processing system known as Jobs. But it also boasts an impressive and sleek multi-threading capability known as Runspaces.

After playing with it, and borrowing code from a few Runspaces pioneers, I am impressed.

Runspaces handles all the messy mechanics of synchronization and concurrency. It’s not something you can grok quickly, and even Microsoft’s amazing Scripting Guys are still working out their understanding of this multi-threading system.

In any case, I went boldly ahead and used Runspaces to do my file reads in parallel. Below is a bit of the code to launch the threads: for each file in the directory I create a thread that runs the above script block, which returns matching patterns in an array.

$RunspacePool = [RunspaceFactory]::CreateRunspacePool(1, 5)

$RunspacePool.Open()

$Tasks = @()

foreach ($item in $list) {

$Task = [powershell]::Create().AddScript($Action).AddArgument($item.Name)

$Task.RunspacePool = $RunspacePool

$status= $Task.BeginInvoke()

$Tasks += @($status,$Task,$item.Name)

}
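Launching is only half of the job: each BeginInvoke call eventually needs a matching EndInvoke to collect the output. The sketch below shows one way the whole round trip might look. The stand-in script block, the file names, and the object used to track each task are assumptions for illustration; a real run would pass in the classification block and the file list instead.

```powershell
# Stand-in work item; the real block would read and scan the named file.
$work = {
    param([string] $Name)
    "$Name has $($Name.Length) characters"
}

$pool = [runspacefactory]::CreateRunspacePool(1, 5)   # between 1 and 5 concurrent runspaces
$pool.Open()

# Launch one pipeline per input and keep its async handle next to it.
$tasks = foreach ($name in 'a.txt', 'report.doc', 'notes.rtf') {
    $ps = [powershell]::Create().AddScript($work).AddArgument($name)
    $ps.RunspacePool = $pool
    [pscustomobject]@{ Name = $name; Shell = $ps; Handle = $ps.BeginInvoke() }
}

# EndInvoke blocks until that pipeline has finished, then returns its output.
$results = foreach ($t in $tasks) {
    $t.Shell.EndInvoke($t.Handle)
    $t.Shell.Dispose()
}

$pool.Close()
$pool.Dispose()
```

Keeping the handle, the PowerShell instance, and the file name together in one object per task avoids having to step through a flat array three elements at a time when gathering results.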

Let’s take a deep breath—we’ve covered a lot.

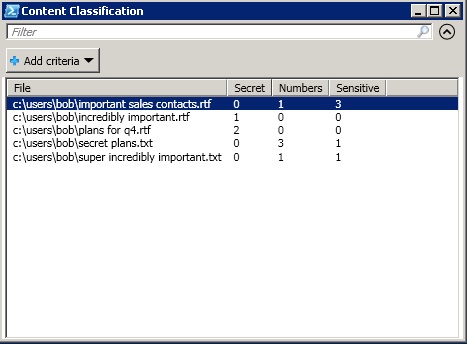

In the next post, I’ll present the full script, and discuss some of the (painful) details. In the meantime, after seeding some files with marker text, I produced the following output with Out-GridView:

Another idea to think about is how to connect the two scripts: the file activity monitoring one and the classification script partially presented in this post.

After all, the classification script should communicate what’s worth monitoring to the file activity script, and the activity script could in theory tell the classification script when a new file is created so that it could classify it—incremental scanning in other words.

Sounds like I’m suggesting, dare I say it, a PowerShell-based security monitoring platform. We’ll start working out how this can be done the next time as well.
