Since the release of the National Institute of Standards and Technology’s (NIST) Cybersecurity Framework (CSF) in 2014, many organizations have integrated the Core Functions into their operations.

Fittingly, numerous organizations now use NIST CSF to address cybersecurity and data risks associated with AI deployments, especially Microsoft 365 Copilot rollouts.

These deployments come at a key time for security and risk managers. Gartner’s latest Microsoft 365 survey reveals that 82% of IT leaders rank M365 Copilot among the top three most valuable new features of M365.

Thankfully, you can apply NIST CSF 2.0 to Microsoft 365 Copilot and its underlying dependencies, such as Microsoft 365 permissions, data classification, and your company’s existing risk policies. In this blog, we’ll review how an organization can approach the Identify, Protect, Detect, and Respond Functions and their Categories.

Identify (ID)

The first area organizations will need to address is the Identify (ID) Function, especially the Asset Management (ID.AM) Category.

ID.AM requires organizations to maintain inventories of the software and services they use, including SaaS offerings. This means more than a simple list of SaaS or PaaS applications in a tracker: it should include documentation and reporting on new solutions like Microsoft 365 Copilot, along with several key details:

- The name and ID of the tenant or environment in which Copilot is hosted or deployed (for multi-tenant organizations)

- The users licensed for Copilot, and the process by which users request access and admins remove it

- Which admins can provision licenses and change settings on the Copilot page in the Microsoft 365 admin center (Note: they must be global administrators in your M365 tenant to access this page)

- How you will assess the risks of the organization's use of Copilot before deployment and continually monitor them afterward

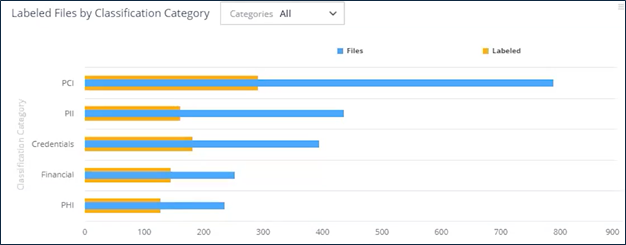
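To make the list above concrete, here is a minimal Python sketch of an ID.AM inventory entry for a Copilot deployment, assuming you track assets as structured records rather than loose spreadsheet rows. The class `CopilotAssetRecord`, its field names, and the sample tenant values are hypothetical; they are not a Microsoft or Varonis schema. The `missing_details` helper simply reports which key details are still blank.

```python
from dataclasses import dataclass, field, fields


@dataclass
class CopilotAssetRecord:
    """Hypothetical ID.AM inventory entry for one Microsoft 365 Copilot deployment.

    Field names are illustrative only, not a Microsoft or Varonis schema.
    """
    tenant_name: str                        # tenant/environment hosting Copilot
    tenant_id: str                          # tenant ID (matters for multi-tenant orgs)
    licensed_users: list[str] = field(default_factory=list)
    access_process: str = ""                # how users request access and admins remove it
    license_admins: list[str] = field(default_factory=list)  # who can assign licenses / change settings
    risk_assessment: str = ""               # reference to the pre-deployment risk assessment
    monitoring_plan: str = ""               # reference to the ongoing monitoring procedure

    def missing_details(self) -> list[str]:
        """Names of inventory details that are still empty, in declaration order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


record = CopilotAssetRecord(
    tenant_name="contoso-prod",             # hypothetical tenant
    tenant_id="00000000-0000-0000-0000-000000000000",
    licensed_users=["alice@contoso.com"],
    license_admins=["admin@contoso.com"],
)
print(record.missing_details())  # ['access_process', 'risk_assessment', 'monitoring_plan']
```

A record with every detail filled in returns an empty list, which makes it easy to report incomplete inventory entries as a recurring check.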

Subcategory ID.AM-07 also requires organizations to maintain an inventory of their data and corresponding metadata. This is especially important when rolling out an AI solution like Copilot. Varonis for Microsoft 365 allows customers to analyze their current labeling gaps at a high level and view what labels are in use.

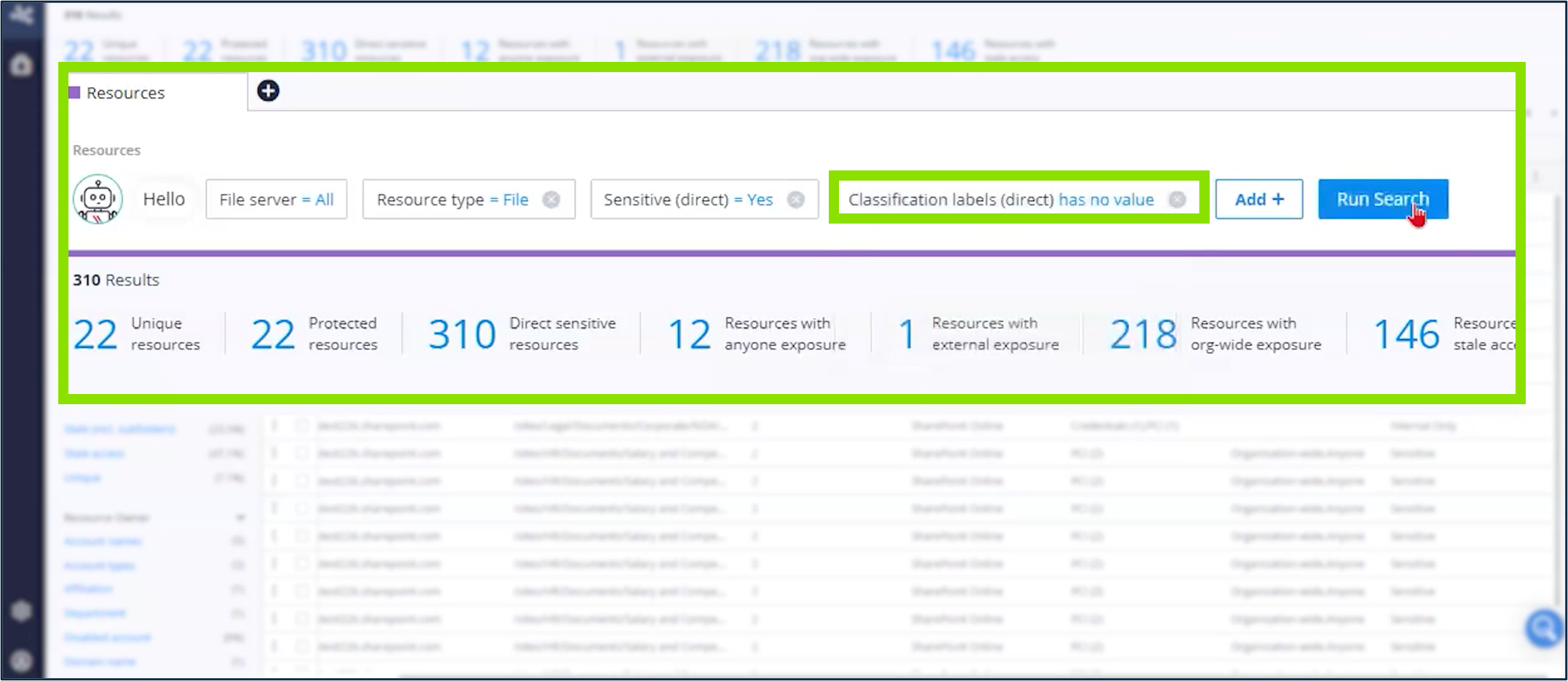

Furthermore, teams can dig into unlabeled content with the intuitive search functionality in Varonis, identifying which files are unlabeled along with their locations in Microsoft 365, file category or type, exposure level, and other metadata.

Administrators can effortlessly save and schedule these searches for ongoing data monitoring with a single click. Without this, they would need to manually navigate the data catalog in Microsoft Purview or use the classic portal's content search to recreate or locate a recent search in "search history."
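The label-gap analysis described above comes down to two questions: how many files carry each label, and which unlabeled files are broadly exposed. The following Python sketch answers both over an exported list of file metadata. It assumes a hypothetical `FileRecord` shape and illustrative exposure values such as `"org-wide"`; it is a generic illustration, not how Varonis implements its search.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FileRecord:
    """Hypothetical metadata row for one file in a data inventory export."""
    path: str
    file_type: str
    label: Optional[str]  # None means the file has no sensitivity label
    exposure: str         # illustrative values: "private", "org-wide", "anyone-link"


def label_summary(files: list[FileRecord]) -> Counter:
    """Count files per sensitivity label, grouping unlabeled files under 'Unlabeled'."""
    return Counter(f.label or "Unlabeled" for f in files)


def unlabeled_and_exposed(files: list[FileRecord], risky: set[str]) -> list[str]:
    """Return paths of unlabeled files whose exposure level is considered risky."""
    return [f.path for f in files if f.label is None and f.exposure in risky]


inventory = [
    FileRecord("/sites/hr/salaries.xlsx", "xlsx", None, "org-wide"),
    FileRecord("/sites/hr/handbook.docx", "docx", "General", "org-wide"),
    FileRecord("/sites/finance/q3.pptx", "pptx", "Confidential", "private"),
    FileRecord("/users/bob/notes.txt", "txt", None, "private"),
]
print(label_summary(inventory)["Unlabeled"])                          # 2
print(unlabeled_and_exposed(inventory, {"org-wide", "anyone-link"}))  # ['/sites/hr/salaries.xlsx']
```

Running queries like these on a schedule is what turns a one-time label audit into the ongoing data inventory that ID.AM-07 calls for.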

Protect (PR)

Organizations can visualize the sensitive data in their environment and maintain an inventory that is updated regularly with Varonis. Administrators can also manage permissions and assign data classification and labels with Varonis, directly or through automated rules.

Varonis can also uniquely re-label files if the user-applied label does not match the sensitive information found in the file. Applying labels in this way helps ensure “access permissions, entitlements, and authorizations are enforced” (PR.AA-05) and the “confidentiality, integrity, and availability of data-at-rest and data-in-use are protected” (PR.DS-01 & PR.DS-10).
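Re-labeling a file whose user-applied label understates its contents can be thought of as comparing the applied label with the minimum label implied by the sensitive information detected in the file. The sketch below assumes a hypothetical four-level label taxonomy (`LABEL_PRIORITY`) and a hypothetical mapping from detected information types to minimum labels (`MIN_LABEL_FOR_TYPE`); it is a generic illustration, not Varonis' classification logic.

```python
# Hypothetical label taxonomy, ordered from least to most restrictive.
LABEL_PRIORITY = ["Public", "General", "Confidential", "Highly Confidential"]

# Hypothetical mapping: detected sensitive info type -> minimum label it requires.
MIN_LABEL_FOR_TYPE = {
    "credit_card_number": "Highly Confidential",
    "us_ssn": "Highly Confidential",
    "salary_data": "Confidential",
}


def rank(label):
    """Position of a label in the taxonomy (raises ValueError for unknown labels)."""
    return LABEL_PRIORITY.index(label)


def corrected_label(applied, detected_types):
    """Return the label a file should carry, upgrading an understated user-applied label.

    applied: the user-applied label, or None if the file is unlabeled.
    detected_types: iterable of sensitive info types found in the file.
    """
    required = [MIN_LABEL_FOR_TYPE[t] for t in detected_types if t in MIN_LABEL_FOR_TYPE]
    if not required:
        return applied  # nothing detected that demands a minimum label
    needed = max(required, key=rank)
    if applied is None or rank(applied) < rank(needed):
        return needed   # applied label understates the content: upgrade it
    return applied      # applied label is already at least as restrictive


print(corrected_label("General", ["us_ssn"]))                  # Highly Confidential
print(corrected_label("Highly Confidential", ["salary_data"])) # Highly Confidential
print(corrected_label(None, ["salary_data"]))                  # Confidential
```

This sketch only ever upgrades a label. Downgrading automatically is riskier, so a conservative policy would route those cases to a human reviewer instead.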

New data generated from Microsoft 365 Copilot prompts can be labeled automatically in two ways. First, if users upload a labeled sensitive file, any resulting files created through the prompt will follow the same label settings. This means all access controls and markings will align with those of the original file.

A second scenario occurs when a user enters sensitive details into a prompt, or a prompt surfaces sensitive information, without a file being uploaded. In this case, any newly created file would adhere to the automatic labeling policies established by Varonis.
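The two labeling scenarios above can be sketched as a single decision: inherit from labeled source files when there are any, otherwise fall back to whatever an automatic labeling policy assigns. This Python sketch assumes a hypothetical ordered label taxonomy and assumes that when several labeled files feed one prompt, the most restrictive label wins. The `auto_label` argument stands in for the result of an automatic labeling policy, which is not modeled here.

```python
# Hypothetical label taxonomy, ordered from least to most restrictive.
LABEL_PRIORITY = ["Public", "General", "Confidential", "Highly Confidential"]


def label_for_copilot_output(source_labels, auto_label=None):
    """Choose a label for a file created from a Copilot prompt.

    source_labels: labels of files used in the prompt (None for unlabeled files).
    auto_label: label an automatic labeling policy would assign from the content,
                or None if no policy matched (computed elsewhere; not modeled here).
    """
    inherited = [label for label in source_labels if label is not None]
    if inherited:
        # Scenario 1: inherit from the labeled source file(s).
        # Assumption: with several sources, the most restrictive label wins.
        return max(inherited, key=LABEL_PRIORITY.index)
    # Scenario 2: no labeled source file, so fall back to automatic labeling.
    return auto_label


print(label_for_copilot_output(["General", "Confidential"]))    # Confidential
print(label_for_copilot_output([], auto_label="Confidential"))  # Confidential
print(label_for_copilot_output([None]))                         # None
```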

Lastly, Varonis for Copilot pulls in log records for continuous monitoring of prompts, responses, and the sensitive files accessed within a session (PR.PS-04). These logs are particularly valuable for internal security teams and external managed service providers as they address the Detect (DE) Function of NIST CSF 2.0.

Detect (DE) and Respond (RS)

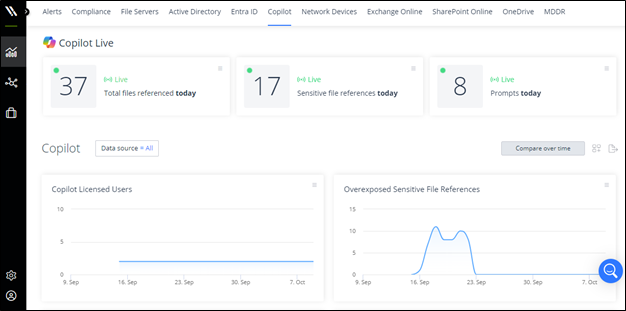

Organizations need a reliable solution to monitor user activity and Copilot usage to detect potentially adverse events (DE.CM-03 and DE.CM-09), especially those involving sensitive data.

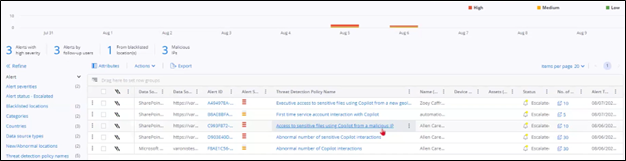

Security teams should also monitor unusual Copilot access patterns in addition to unusual prompts. Varonis for Copilot offers crucial visibility to data security professionals, enabling them to respond to sensitive data access and examine these adverse events when they occur (DE.AE-02).
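One simple way to operationalize "unusual Copilot access patterns" is a per-user baseline: compare today's count of sensitive files a user reached through Copilot with that user's own history, and flag large deviations. The sketch below uses a z-score with a floor on the standard deviation. The technique, the `threshold`/`min_days`/`min_sigma` defaults, and the input shape are assumptions for illustration, not how Varonis builds its behavioral models.

```python
from statistics import mean, pstdev


def flag_unusual_access(history, today, threshold=3.0, min_days=7, min_sigma=1.0):
    """Flag users whose sensitive-file accesses through Copilot today exceed their baseline.

    history: dict of user -> list of past daily counts (hypothetical input shape).
    today: dict of user -> today's count.
    A user is flagged when (today - mean) / stdev > threshold. The stdev is floored at
    `min_sigma` so that very steady users are not flagged for tiny increases.
    Users with fewer than `min_days` of history are skipped (no reliable baseline yet).
    """
    flagged = []
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < min_days:
            continue
        sigma = max(pstdev(past), min_sigma)
        if (count - mean(past)) / sigma > threshold:
            flagged.append(user)
    return sorted(flagged)


history = {
    "alice": [2, 3, 2, 4, 3, 2, 3],
    "bob": [10, 12, 11, 9, 10, 11, 12],
    "carol": [1, 1],
}
today = {"alice": 25, "bob": 13, "carol": 50}
print(flag_unusual_access(history, today))  # ['alice']
```

Skipping users without enough history is a design choice; a stricter policy might treat any sensitive Copilot access by a brand-new user as worth reviewing.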

Monitor Copilot use across the organization with a single pane of glass.

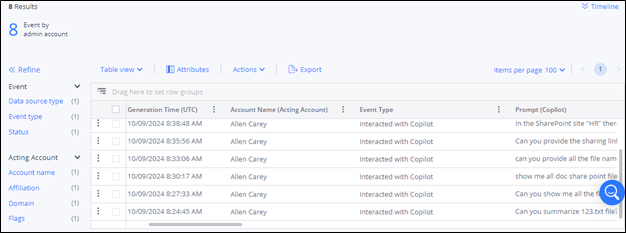

During analysis, teams can see where sensitive files are exposed based on Copilot responses. However, a failed prompt that does not return a sensitive response is often just as telling about a potential insider threat.
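Failed prompts can be surfaced in a similar way. The sketch below assumes Copilot audit events have already been normalized into a hypothetical `PromptEvent` record, with one flag for whether the prompt sought sensitive content (as judged by some upstream rule) and another for whether the response actually returned any. It flags users with several failed sensitive prompts inside a short sliding window; the window size and failure count are illustrative defaults, not recommended thresholds.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class PromptEvent:
    """Hypothetical normalized Copilot audit event."""
    user: str
    timestamp: datetime
    sought_sensitive: bool    # prompt matched a sensitive-topic rule (assumed computed upstream)
    returned_sensitive: bool  # response referenced sensitive files


def repeated_failed_probes(events, window=timedelta(hours=1), min_failures=3):
    """Return users with at least `min_failures` failed sensitive prompts within any `window`."""
    failures = defaultdict(list)
    for e in events:
        if e.sought_sensitive and not e.returned_sensitive:
            failures[e.user].append(e.timestamp)

    flagged = []
    for user, times in failures.items():
        times.sort()
        start = 0
        for end in range(len(times)):
            # Slide the window start forward until it spans at most `window`.
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 >= min_failures:
                flagged.append(user)
                break
    return sorted(flagged)


t0 = datetime(2025, 1, 6, 9, 0)
events = [
    PromptEvent("mallory", t0, True, False),
    PromptEvent("mallory", t0 + timedelta(minutes=10), True, False),
    PromptEvent("mallory", t0 + timedelta(minutes=20), True, False),
    PromptEvent("dave", t0, True, False),
    PromptEvent("dave", t0 + timedelta(hours=2), True, False),
    PromptEvent("dave", t0 + timedelta(hours=4), True, False),
    PromptEvent("erin", t0, True, True),
]
print(repeated_failed_probes(events))  # ['mallory']
```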

In addition to Varonis’ powerful capabilities to surface and alert on Copilot activities, some organizations also use SIEM solutions such as Splunk or Microsoft Sentinel for correlation (DE.AE-03).

Varonis can stream alert data to these services, as well as create tickets in ServiceNow, Jira, and more. Through these integrations and native alerting, teams can categorize and prioritize incidents in the Varonis alerting tab (RS.MA-03). It’s also possible to see which incidents are escalated or elevated to track ongoing response activities (RS.MA-04).
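Categorizing and prioritizing incidents (RS.MA-03) and tracking escalations (RS.MA-04) can be sketched as a scoring pass over incoming alerts. This Python example assumes hypothetical severity and data-sensitivity scales, a multiplicative score, and an illustrative `escalate_at` cutoff; in practice these values would come from your alerting tool and incident response plan, not from this code.

```python
from dataclasses import dataclass

# Hypothetical scales; real values would come from your incident response plan.
SEVERITY_WEIGHT = {"low": 1, "medium": 2, "high": 3}
SENSITIVITY_WEIGHT = {"none": 1, "internal": 2, "regulated": 3}


@dataclass
class Incident:
    id: str
    category: str          # illustrative, e.g. "copilot-sensitive-access"
    severity: str
    data_sensitivity: str
    escalated: bool = False


def score(incident):
    """Priority score: severity weight times data-sensitivity weight."""
    return SEVERITY_WEIGHT[incident.severity] * SENSITIVITY_WEIGHT[incident.data_sensitivity]


def triage(incidents, escalate_at=6):
    """Mark incidents scoring at or above `escalate_at` as escalated; return them highest first."""
    for incident in incidents:
        incident.escalated = score(incident) >= escalate_at
    return sorted(incidents, key=score, reverse=True)


queue = triage([
    Incident("INC-1", "copilot-sensitive-access", "high", "regulated"),
    Incident("INC-2", "copilot-failed-probe", "medium", "internal"),
    Incident("INC-3", "copilot-failed-probe", "low", "regulated"),
    Incident("INC-4", "copilot-sensitive-access", "high", "internal"),
])
print([(i.id, i.escalated) for i in queue])
# [('INC-1', True), ('INC-4', True), ('INC-2', False), ('INC-3', False)]
```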

AI data security is an old problem in a new form.

In late 2024, HackerOne released several early findings ahead of its annual AI security report, which included responses from 500 security leaders and practitioners.

The survey responses tell a story similar to what we find in many Varonis Data Risk Assessments. Security and IT professionals are primarily worried about the leakage of training data, such as Microsoft 365 content used by Copilot (35%), and the unauthorized use of AI within their organizations that could access or expose this data (33%).

Yet the root cause of these concerns is not new. Most organizations lack a solution set capable of visualizing their at-risk data and the permissions to that data, and that is an old problem.

When it comes to understanding, assessing, and responding to the risks posed by adopting Microsoft 365 Copilot and other AI solutions in your supply chain (GV.SC-07), 68% of the surveyed respondents said that an “external and unbiased review of AI implementations” is the best course of action.

Varonis works with existing SOC teams, MSPs, and MSSPs to conduct such a review of their current state. After deploying Varonis for Microsoft 365 and Copilot, these teams can operationalize these reviews on a regular basis.

No organization is immune to permissions and compliance drift, so it’s imperative to develop a risk management strategy that covers both the before and after stages of a Copilot rollout. One 2,500+ employee company is among the many organizations proactively using Varonis to deploy Copilot safely.

Microsoft 365 Copilot will continue to evolve and gain traction in the market as users find value in the productivity tool. Security leaders looking to apply NIST CSF 2.0 and other cybersecurity frameworks to AI can rely on Varonis to prevent breaches and maintain compliance.

Ready to ensure a secure Microsoft 365 Copilot rollout? Request your free Copilot Security Scan today.

What should I do now?

Below are two ways you can continue your NIST CSF journey to reduce data risk at your company:

Schedule a demo with us to see Varonis in action. We'll personalize the session to your org's data security needs and answer any questions.

See a sample of our Data Risk Assessment and learn the risks that could be lingering in your environment. Varonis' DRA is completely free and offers a clear path to automated remediation.