Wade Baker is best known for creating and leading the Verizon Data Breach Investigations Report (DBIR). Readers of this blog are familiar with the DBIR as our go-to resource for breach stats and other practical insights into data protection. So we were very excited to listen to Wade speak recently at the O’Reilly Data Security Conference.

In his new role as partner and co-founder of the Cyentia Institute, Wade presented some fascinating research on the disconnect between CISOs and the board of directors. In short: if you can’t relate data security spending back to the business, you won’t get a green light on your project.

We took the next step and contacted Wade for an IOS interview. It was a great opportunity to tap into his deep background in data breach analysis, and our discussion ranged over the DBIR, breach costs, phishing, and what boards look for in security products. What follows is a transcript based on my phone interview with Wade last month.

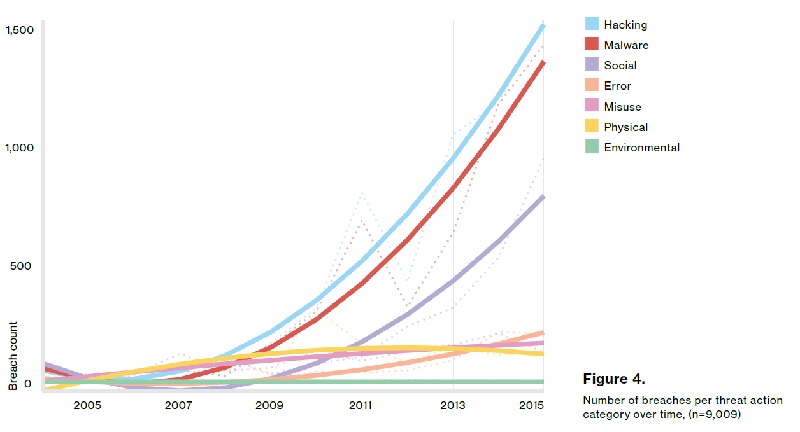

Inside Out Security: The Verizon Data Breach Investigations Report (DBIR) has been incredibly useful to me in understanding the real-world threat environment. One of the first things that caught my attention, and I think this has been the trend for the last five or six years, was that external threats or hackers far outweigh insiders.

Wade Baker: Yeah.

IOS: But you’ll see headlines that say just the opposite, the numbers flipped around, like ‘70% of attacks are caused by insiders’. I was wondering if you had any comments on that and perhaps other data points that should be emphasized more?

WB: The whole reason that we started doing the DBIR in the first place, before it was ever a report, is just simply…I was doing a lot of risk-assessment related consulting. And it always really bothered me that I would be trying to make a case, ‘Hey, pay attention to this,’ and I didn’t have much data to back it up.

But there wasn’t really much out there to help me say, ‘This thing on the list is a higher risk because it’s, you know, much more likely to happen than this other thing right here.’

Interesting Breach Statistics

WB: Anyone who’s done those lists knows there’s a bunch of things on this list. When we started doing that, it was kind of a simple notion of, ‘All right, let me find a place where that data might exist, forensic investigations, and I’ll decompose those cases and just start counting things.’

Attributes of incidents, and insiders versus outsiders is one I had always heard about, like you said. Up until that point, the claim was that 80% of all risk or 80% of all security incidents are insiders. It’s one of those things that I almost considered doctrine at that time in the industry!

Then we showed pretty much the exact opposite! This is the one stat that I think has made people the most upset out of my 10 years doing that report!

People would push back and kind of argue with things, but that is the one, like, claws came out on that one, like, ‘I can’t believe you’re saying this.’

There are some nuances there. For instance, when you study data breaches, then it does. Every single data set I ever looked at was weighted toward outsiders.

When you study all security incidents, no matter what severity, no matter what the outcome, then things do start leaning back toward insiders. Just when you consider all the mistakes and policy violations and, you know, just all that kind of junk.

IOS: Right, yes.

WB: I think defining terms is important, and one reason why there’s disagreement. Back to your question about other data points in the report that I love.

The ones that show the proportion of breaches that tie back to relatively simple attacks, which could have been thwarted by relatively cheap defenses or processes or technologies.

I think we tend to have this notion — maybe it’s just an excuse — that every attack is highly sophisticated and every fix is expensive. That’s just not the case!

The longer we believe those kind of things, I think we just sit back and don’t actually do the sometimes relatively simple stuff that needs to be done to address the real threat.

I love that one, and I also love the time to detection. We threw that in there almost on a whim, just saying, ‘It seems like a good thing to measure about a breach.’

We wanted to see how long it takes, you know, from the time they start trying to link to it, and from the time they get inside to the time they find data, and from the time they find the data to exfiltrating it. Then of course how long it takes to detect it.

I think those were some of the more fascinating findings over the years.

IOS: I’m nodding my head about the time to discovery. Everything we’ve learned over the last couple of years seems to validate that. I think you said in one of your reports that the proper measurement unit is months. I mean, minimally weeks, but months. It seems to be verified by the bigger hacks we’ve heard about.

WB: I love it because many other people started publishing that same thing, and it was always months! So it was neat to watch that measurement vetted out over multiple different independent sources.

Breach Costs

IOS: I’m almost a little hesitant to get into this, but recently you started measuring breach cost based on proprietary insurance data. I’ve been following the controversy.

Could you just talk about it in general and maybe some of your own thoughts on the disparities we’ve been seeing in various research organizations?

WB: Yeah, that was something that for so long, because of where we got our information, it was hard to get all of the impact side out of a breach. Because you do a forensic investigation, you can collect really good info about how it happened, who did it, and that kind of thing, but it’s not so great six months or a year down the road.

You’re not still inside that company collecting data, so you don’t get to see the fallout unless it becomes very public (and sometimes it does).

We were able to study some costs — like the premier, top of line breach cost stats you always hear about from Ponemon.

IOS: Yes.

WB: And I’ve always had some issues with that, not to get into throwing shade or anything. The per record cost of a breach is not a linear type equation, but it’s treated like that.

What you get many times is something like an Equifax, 145 million records. Plus you multiply that by $198 per record, and we get some outlandish cost, and you see that cost quoted in the headlines. It’s just not how it works!

There’s a decreasing cost per record as you get to larger breaches, which makes sense.

There are other factors there that are involved. For instance, I saw a study from RAND, by Sasha Romanosky recently, where after throwing in predictors like company revenue and whether or not they’ve had a breach before — repeat offenders so to speak — and some other factors, then she really improves the cost prediction in the model.

I think those are the kind of things we need to be looking at and trying to incorporate because I think the number of records probably, at best, describes about a third … I don’t even know if it gets to a half of the cost of the breach.
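To make the per-record arithmetic in this exchange concrete, here is a minimal Python sketch. The linear function uses only the figures quoted above (145 million records at $198 each). The sublinear function simply illustrates what a decreasing cost per record looks like: its power-law shape and the `base` and `exponent` values are assumptions invented for this sketch, not numbers from Ponemon, RAND, or the DBIR.

```python
def linear_cost(records: int, per_record: float = 198.0) -> float:
    """Headline-style estimate: every record costs the same amount."""
    return records * per_record


def sublinear_cost(records: int, base: float = 1000.0, exponent: float = 0.5) -> float:
    """Illustrative model only: total cost grows more slowly than record count.

    `base` and `exponent` are hypothetical values chosen for this sketch.
    Any exponent below 1 makes the cost per record fall as breaches get larger.
    """
    return base * records ** exponent


def cost_per_record(total_cost: float, records: int) -> float:
    """Average cost per record implied by a total."""
    return total_cost / records


if __name__ == "__main__":
    equifax_records = 145_000_000
    print(f"Linear estimate: ${linear_cost(equifax_records):,.0f}")
    for n in (1_000, 1_000_000, equifax_records):
        per_record = cost_per_record(sublinear_cost(n), n)
        print(f"{n:>11,} records -> ${per_record:,.2f} per record (illustrative)")
```

The linear estimate comes out to $28.71 billion, which is the kind of headline figure being criticized here. The illustrative curve only shows the direction of the effect; real parameters would have to be fitted to actual loss data.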

IOS: I did look at some of these reports and I’m a little skeptical about the number of records itself as a metric because it’s hard to know this, I think.

But if it’s something you do on a per incident basis, then the numbers look a little bit more comparable to Ponemon.

Do you think it’s a problem, looking at it on per record basis?

WB: First of all, an average cost per record, I would like to step away from that as a metric, just across the board. But tying cost to the number of records probably…I mean, it works better for, say, consumer data or payment card data or things like that where the costs are highly associated with the number of people affected. You then get into cost of credit monitoring and the notifications. All of those type things are certainly correlated to how many people or consumers are affected.

When you talk about IP or other types of data, there’s just almost no correlation. How do you count a single stolen document as a record? Do you count megabytes? Do you count documents?

Those things have highly varied value depending on all kinds of circumstances. It really falls down there.

What Boards Care About

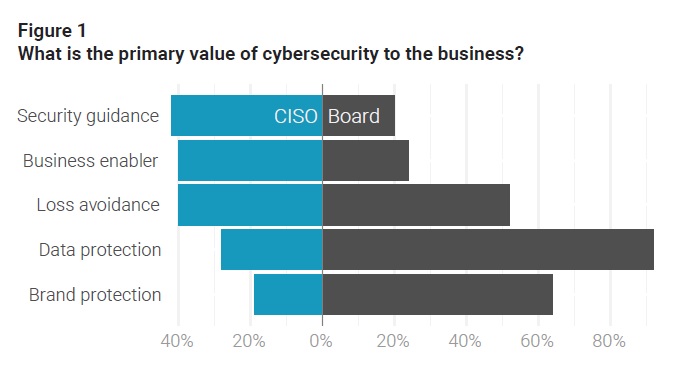

IOS: I just want to get back to your O’Reilly talk. And one of the things that also resonated with me was the disconnect between the board and the CISOs who have to explain investments. And you talk about that disconnect.

I was looking at your blog and Cyber Balance Sheet reports, and you gave some examples of this — something that the CISO thinks is important, the board is just saying, ‘What?’

So I was wondering if you can mention one or two examples that would give some indication of this gap?

WB: The CISOs have been going to the board probably for several rounds now, maybe years, presenting information, asking for more budgets, and the board is trying to ‘get’ what they need to build a program to do the right things.

Pretty soon, many boards start asking, ‘When are we done? We spent money on security last month. Why are we doing it this quarter too?’

Security as a continual and sometimes increasing investment is different than a lot of other things that they look at. They think of, ‘Okay, we’re going to spend money on this project, get it done, and we’re going to have this value at the end of that.’

We can understand those things, but security is just not like that. I’ve seen this break down a lot with CISOs who are coming from, ‘We need to do this project.’

You lay on top of all this that the board is not necessarily going to see the fruits of their investment in security! Because if it works, they don’t see anything bad at all.

Another problem that CISOs have is ‘how do I go to them when we haven’t had any bad things happen, and asking for more money?’ It’s just a conversation where you should be prepared to say why that is — connect these things to the business.

By doing these things, we’re enabling these pieces of the business to function properly. It’s a big problem, especially for more traditional boards that are clearly focused on driving revenue and other areas of the business.

IOS: Right. I’m just thinking out loud now … Is the board comparing it to physical security, where I’m assuming you make this initial investment in equipment, cameras, and recording and whatever, and then your costs, going forward, are mostly people or labor costs?

They probably are looking at it and saying, ‘Why am I spending more? Why am I buying more cameras or more modern equipment?’

WB: I think so! I’ve never done physical security, other than as a sideline to information security. Even if there are continuing costs, they live in that physical world. They can understand why, ‘Okay, we had a break-in last month, so we need to, I don’t know, add a guard gate or something like that.’ They get why and how that would help.

Whereas in the logical or cyber security world, they sometimes really don’t understand what you’re proposing, why it would work. If you don’t have their trust, they really start trying to poke holes. Then if you’re not ready to answer the question, things just kind of go downhill from there.

They’re not going to believe that the thing you’re proposing is actually going to fix the problem. That’s a challenge.

IOS: I remember you mentioning during your O’Reilly talk that helpful metaphors can be useful, but it has to be the right metaphor.

WB: Right.

IOS: I mean, getting back to the DBIR. In the last couple of years, there was an uptick in phishing. I think probably this should enter some of these conversations because it’s such an easy way for someone to get inside. For us at Varonis, we’ve been focused on ransomware lately, and there are DDoS attacks as well.

Will these new attacks shift the board’s attention to something they can really understand, since these attacks actually disrupt operations?

WB: I think it can because things like ransomware and DDoS are things that are apparent just kind of in and of themselves. If they transpire, then it becomes obvious and there are bad outcomes.

Whereas more cloak-and-dagger stealing of intellectual property or siphoning a bunch of consumer data is not going to become apparent, or if it is, it’s months down the road, like we talked about earlier.

I think these things are attention-getters within a company, attention-getters from the headlines. I mean, from what I’ve heard over the past year, as this ransomware has been steadily increasing, it has definitely received the board’s attention!

I think it is a good hook to get in there and show them what they’re doing. And ransomware is a good one because it has a corporate aspect and a personal aspect.

You can talk to the board about, ‘Hey, you know, this applies to us as a company, but this is a threat to you in your laptop in your home as well. What about all those pictures that you have? Do you have those things backed up? What if they got on your data at home?’

And then walk through some of the steps and make it real. I think it’s an excellent opportunity for that. It’s not hype, it’s actually occurring and top of the list in many areas!

IOS: This brings something else to mind. Yes, you could consider some of these breaches as a cost of doing business, but if you’re allowing an outsider to get access to all your files, I would think, high-level executives would be a little worried that they could find their emails. ‘Well, if they can get in and steal credit cards, then they can also get into my laptop.’

I would think that alone would get them curious!

WB: To be honest, I have found that most of the board members that I talk to, they are aware of security issues and breaches much more than they were five to ten years ago. That’s a good thing!

They might sit on boards of other companies, and we’ve had lots of reporting. The chance that a board member has been with a company that’s experienced a breach, or knows a buddy who has, is pretty good by now. So it’s a real problem in their mind!

But I think the issue, again, is how do you justify to them that the security program is making that less likely? And many of them are terrified of data breaches, to be honest.

Going back to that Cyber Balance Sheet report, I was surprised when we asked board members what is the biggest value that security provides — you know, kind of the inverse of your biggest fear? They all said preventing data breaches. And I would have thought they’d say, ‘Protect the brand,’ or ‘Drive down risk,’ or something like that. But they answered, ‘Prevent data breaches.’

It just shows you what’s at the top of their minds! They’re fearful of that and they don’t want that to happen. They just don’t have a high degree of trust that the security program will actually prevent them.

IOS: I have to say, when I first started at Varonis, some of these data breach stories were not making the front page of The New York Times or The Washington Post, and that certainly has changed. You can begin to understand the fear. Getting back to something you said earlier about how simple approaches, or as we call it block-and-tackle, can prevent breaches.

Another way to mitigate the risk of these breaches is something that you’ve probably heard of, Privacy by Design, or Security by Design. One of the principles is just simply reduce the data that can cause the risk.

Don’t collect as much, don’t store as much, and delete it when it’s no longer used. Is that a good argument to the board?

WB: I do, and I think there are several approaches. I’ve given this recommendation fairly regularly, to be honest: minimize the data that you’re collecting. Because I think a lot of companies don’t need as much data as they’re collecting! It’s just easy and cheap to collect it these days, so why not?

Helping organizations understand that it is a risk decision, not just a cost decision, is important. And then of what you collect, how long do you retain it?

Because the longer you retain it and the more you collect, you’re sitting on a mountain of data and you can become a target of criminals just through that fact.

For the data that you do have and you do need to retain … I’m a big fan of trying to consolidate it and not let it spread around the environment.

One of the metrics I like to propose is, ‘Okay, here’s the data that’s important to me. We need to protect it.’ Ask people where that lives or how many systems that should be stored on in the environment, and then go look for it.

You can multiply that number by like 3 or 5 or 10 sometimes. And that’s the real answer! It’s a good metric to strive for: the number of target systems that that information should reside within. Many breaches come from areas where that data should not have been.
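The metric described here, comparing where sensitive data should live with where it actually turns up, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the report fields, and the system names in the usage example are all hypothetical, and the list of observed systems would have to come from whatever discovery scan an organization already runs.

```python
def sprawl_report(expected_systems, observed_systems):
    """Compare where sensitive data should live with where it was found.

    Both arguments are iterables of system names (hypothetical identifiers).
    """
    expected = set(expected_systems)
    observed = set(observed_systems)
    # Systems holding the data that nobody listed as an approved location.
    unexpected = sorted(observed - expected)
    # How many times larger the real footprint is than the intended one.
    ratio = len(observed) / len(expected) if expected else float("inf")
    return {
        "expected": len(expected),
        "observed": len(observed),
        "unexpected": unexpected,
        "sprawl_ratio": ratio,
    }


if __name__ == "__main__":
    report = sprawl_report(
        ["crm-db", "billing-db"],
        ["crm-db", "billing-db", "dev-laptop-7", "file-share-2", "test-db", "backup-nas"],
    )
    print(report)
```

A `sprawl_ratio` of 3 in this made-up example corresponds to the "multiply that number by 3" case, and the `unexpected` list is the set of places to investigate first.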

Security Risk Metrics

IOS: That leads to the next question about risk metrics. One we use at Varonis is PII data that has Windows permissions marked for Everyone. They’re always surprised during assessments when they see how large it is.

This relates to stale data. It could be, you know, PII data that hasn’t been touched in a while. It’s sitting there, as you mentioned. No one’s looking at it, except the hackers who will get in and find it!

Are there other good risk metrics specifically related to data?

WB: Yup, I like those. You mentioned phishing a while ago. I like stats such as the number of employees that will click-through, say, if you do a phishing test in the organization. I think that’s always kind of an eye-opening one because boards and others can realize that, ‘Oh, okay. That means we got a lot of people clicking, and there’s really no way we can get around that, so that forces us to do something else.’

I’m a fan of measuring things like number of systems compromised in any given time, and then the time that it takes to clean those up and drive those two metrics down, with a very focused effort over time, to minimize them. You mentioned people that have…or data that has Everyone access.

IOS: Yes.

WB: I always like to know, whether it’s a system or an environment or a scope, how many people have admin access! Because we’re highly over-privileged in most security environments.

I’ve seen eyes pop, where people say, ‘What? We can’t possibly have that many people that have that level of need to know on…for that kind of thing.’ So, yeah, that’s a few off the top of my head.

IOS: Back to phishing. I interviewed Zinaida Benenson a couple months ago — she presented at Black Hat. She did some interesting research on phishing and click rates. Now, it’s true that she looked at college students, but the rates were astonishing. It was something like 40% were clicking on obvious junk links in Facebook messages and about 20% in email spam.

She really feels that someone will click and it’s just almost impossible to prevent that in an organization. Maybe as you get a little older, you won’t click as much, but they will click.

WB: I’ve measured click rates at about 23%, 25%. So 20% to 25% in organizations. And not only in organizations, but organizations that paid to have phishing trials done. So I got that data from, you know, a company that provides us phishing tests.

You would think these would be the organizations that say, ‘Hey, we have a problem, I’m aware. I’m going to the doctor.’ Even among those, one in four are clicking. By the time an attacker sends 10 emails within the organization, there’s like a 99% rate that someone is going to click.
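The "someone will click" point follows from simple probability. The sketch below assumes each recipient clicks independently with the same probability, which is a simplification rather than anything measured in the research discussed here. Under that assumption, a 25% click rate and 10 emails gives roughly a 94% chance of at least one click, a little below the conversational "like 99%" figure, and about 17 emails are needed to pass 99%.

```python
import math


def prob_at_least_one_click(click_rate: float, emails: int) -> float:
    """Chance that at least one of `emails` recipients clicks.

    Assumes independent recipients with an identical click rate.
    """
    return 1.0 - (1.0 - click_rate) ** emails


def emails_needed(click_rate: float, target: float) -> int:
    """Smallest number of emails for which the chance of a click reaches `target`."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - click_rate))


if __name__ == "__main__":
    print(f"10 emails at 25%: {prob_at_least_one_click(0.25, 10):.1%}")
    print(f"Emails needed for 99%: {emails_needed(0.25, 0.99)}")
```

Either way the conclusion in the interview holds: at these click rates, an attacker needs only a handful of messages before a click becomes close to certain.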

IOS: She had some interesting things to say about curiosity and feeling bold. Some people, when they’re in a good mood, they’ll click more.

I have one more question on my list … about whether data breaches are a cost of business or are being treated as a cost of business.

WB: That’s a good one.

IOS: I had given an example of shrinkage in retail as a cost of business. Retailers just always assume that, say, there’s a 5% shrinkage. Or is security treated — I hope it will be treated — differently?

WB: As far as I can tell, we do not treat it like that. But I’ll be honest, I think treating it a little bit like that might not be a bad thing! In other words, there have been some studies that look at the losses due to breaches and incidents versus losses like shrinkage and other things that are just very, very common, and therefore we’re not as fearful of them.

Shrinkage takes many, many more…I can’t remember what the…but it was a couple orders of magnitude more, you know, for a typical retailer than data breaches.

We’re much more fearful of breaches, even at the board level. And I think that’s because they’re not as well understood and they’re a little bit newer and we haven’t been dealing with it.

When you’re going to have certain losses like that and they’re fairly well measured, you can draw a distribution around them and say that I’m 95% confident that my losses are going to be within this limit.

Then that gives you something definite to work with, and you can move on. I do wish we could get there with security, where we figure out that, ‘All right, I am prepared to lose this much.’

Yes, we may have a horrifying event that takes us out of that, and I don’t want to have that. We can handle this, and we handle that through these ways. I think that’s an important maturity thing that we need to get to. We just don’t have the data to get there quite yet.
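The "95% confident my losses will be within this limit" idea can be sketched as an empirical percentile over a history of measured losses. This is a minimal illustration, assuming such a history exists (which, as noted above, is exactly what security often lacks). The function name and the nearest-rank method are choices made for this sketch, not something taken from the interview.

```python
import math


def loss_limit(losses, confidence: float = 0.95) -> float:
    """Return the loss value that `confidence` of observed periods stayed within.

    Uses the nearest-rank percentile on historical losses (hypothetical data).
    """
    if not losses:
        raise ValueError("need at least one observed loss")
    if not 0.0 < confidence <= 1.0:
        raise ValueError("confidence must be in (0, 1]")
    ordered = sorted(losses)
    # round() guards against floating-point noise before taking the ceiling.
    rank = math.ceil(round(confidence * len(ordered), 9))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical yearly loss figures, in thousands of dollars.
    history = [12, 7, 30, 18, 9, 22, 15, 11, 95, 14,
               8, 16, 20, 13, 10, 17, 25, 19, 21, 6]
    print(f"95% of years stayed within: {loss_limit(history)}k")
```

Anything above the returned limit is the "horrifying event" territory: rare enough to plan for separately, for example through insurance, rather than absorb as a routine cost.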

IOS: I hear what you’re saying. But there’s just something about security and privacy that may be a little bit different …

WB: There is. There certainly is! The fact that security has externalities where it’s not just affecting my company like shrinkage. I can absorb those dollars. But my failures may affect other people, my partners, consumers and if you’re in critical infrastructure, society. I mean that makes a huge difference!

IOS: Wade, this has been an incredible discussion on topics that don’t get as much attention as they should.

Thanks for your insights.

WB: Thanks Andy. Enjoyed it!
