During his keynote speech at RSA Conference 2024, Matt Radolec, Vice President of Incident Response and Cloud Operations at Varonis, described a scenario that is becoming increasingly common in the gen AI era:

An organization came to Varonis with a mystery. Their competitor had somehow gained access to sensitive account information and was using that data to target the organization’s customers with ad campaigns.

The organization had no idea how the data was obtained; this was a security nightmare that could jeopardize their customers’ confidence and trust.

Working with Varonis, the company identified the source of the data breach. A former employee had used a gen AI copilot to access an internal database full of account data. They then copied sensitive details, such as customer spend and product usage, and took them to a competitor.

Watch Matt Radolec’s complete discussion on how to prevent AI data breaches at RSA Conference 2024.

This example highlights a growing problem: the broad use of gen AI copilots will inevitably increase data breaches.

According to a recent Gartner survey, the most common AI use cases include generative AI-based applications, like Microsoft 365 Copilot and Salesforce’s Einstein Copilot. While these tools are an excellent way for organizations to increase productivity, they also create significant data security challenges.

In this blog, we’ll explore these challenges and show you how to secure your data in the era of gen AI.

Gen AI’s data risk

Nearly 99% of permissions are unused, and more than half of those permissions are high-risk. Unused and overly permissive data access is always an issue for data security, but gen AI throws fuel on the fire.

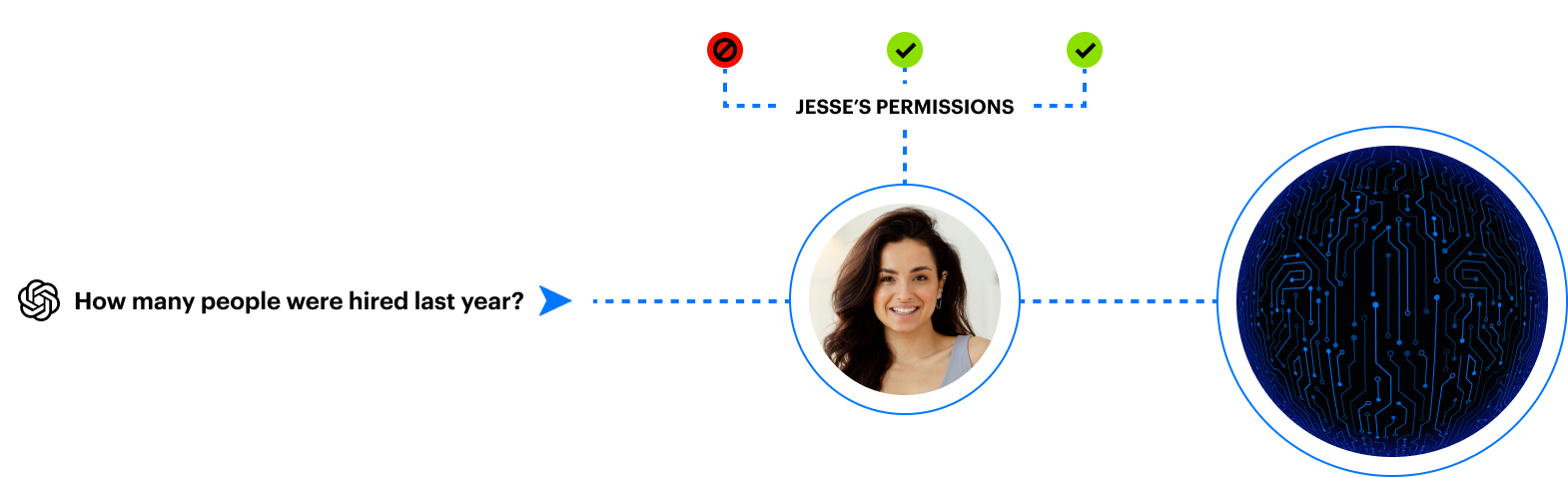

Gen AI tools can access what users can access. Right-sizing access is critical.

When a user asks a gen AI copilot a question, the tool formulates a natural-language answer based on internet and business content via graph technology.

Because users often have overly permissive data access, the copilot can easily surface sensitive data — even if the user didn’t realize they had access to it. Many organizations don’t know what sensitive data they have in the first place, and right-sizing access is nearly impossible to do manually.
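To make the idea of right-sizing concrete, here is a minimal sketch of one way to find unused permissions: compare what each user has been granted against what they have actually accessed. The `Grant` type, the `sensitive` flag, and the sample users and resources are all hypothetical. A real environment would pull this data from its own identity and audit systems.

```python
from typing import Iterable, NamedTuple


class Grant(NamedTuple):
    user: str
    resource: str
    sensitive: bool  # hypothetical flag: the resource holds sensitive data


def unused_grants(grants: Iterable[Grant],
                  accessed: Iterable[tuple[str, str]]) -> list[Grant]:
    """Return grants that have no matching (user, resource) access event."""
    used = set(accessed)
    return [g for g in grants if (g.user, g.resource) not in used]


# Illustrative data only; not taken from any real system.
grants = [
    Grant("alice", "crm/accounts.db", True),
    Grant("alice", "wiki/onboarding", False),
    Grant("bob", "crm/accounts.db", True),
    Grant("bob", "finance/payroll.xlsx", True),
]
access_log = [("alice", "wiki/onboarding"), ("bob", "crm/accounts.db")]

stale = unused_grants(grants, access_log)
high_risk = [g for g in stale if g.sensitive]
print(f"{len(stale)} of {len(grants)} grants unused; "
      f"{len(high_risk)} of those touch sensitive data")
```

Every grant in `stale` is access a copilot could use on the user's behalf even though the user never has, which makes those grants natural candidates for review.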

Gen AI lowers the bar on data breaches.

Threat actors no longer need to know how to hack a system or understand the ins and outs of your environment. They can simply ask a copilot for sensitive information or credentials that allow them to move laterally through the environment.

Security challenges that come with enabling gen AI tools include:

- Employees have access to far too much data

- Sensitive data is often unlabeled or mislabeled

- Insiders can quickly find and exfiltrate data using natural language

- Attackers can discover secrets for privilege escalation and lateral movement

- Right-sizing access is nearly impossible to do manually

- Generative AI can create new sensitive data rapidly

These data security challenges aren’t new, but they are highly exploitable, given the speed and ease with which gen AI can surface information.
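As an illustration of the labeling challenge in the list above, the sketch below suggests a label for a piece of text based on simple pattern matches. The patterns, the label names (`Confidential`, `Internal`, `General`), and the `suggest_label` function are assumptions made for this example. They are not the classification rules of any particular product, and production classifiers are far more thorough.

```python
import re

# Hypothetical detection patterns, deliberately simple.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "secret": re.compile(r"\b(password|api[_ ]key)\s*[:=]\s*\S+", re.IGNORECASE),
}


def suggest_label(text: str) -> tuple[str, list[str]]:
    """Return a suggested label and the names of the patterns that matched."""
    hits = sorted(name for name, pat in PATTERNS.items() if pat.search(text))
    if "ssn" in hits or "secret" in hits:
        return "Confidential", hits
    if hits:
        return "Internal", hits
    return "General", hits


for sample in [
    "Quarterly roadmap draft",
    "Contact: jane@example.com",
    "password = hunter2",
]:
    print(sample, "->", suggest_label(sample))
```

Even a rough pass like this shows why unlabeled data is a problem: a copilot will surface the third sample as readily as the first unless something marks it as sensitive.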

How to stop your first AI breach from happening

The first step in removing the risks associated with gen AI is to ensure that your house is in order.

It’s a bad idea to let copilots loose in your organization if you don’t know where your sensitive data lives or what it is, can’t analyze exposure and risk, and can’t close security gaps and fix misconfigurations efficiently.

Once you have a handle on data security in your environment and the right processes are in place, you are ready to roll out a copilot. At this point, you should focus on three areas: permissions, labels, and human activity.

- Permissions: Ensure that your users' permissions are right-sized and that the copilot’s access reflects those permissions.

- Labels: Once you understand what sensitive data you have, you can apply labels to it to enforce data loss prevention (DLP) policies.

- Human activity: It is essential to monitor how the copilot is used and to review any suspicious behavior detected. Monitoring prompts and the files accessed is crucial to preventing copilot abuse.
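The human-activity point can be sketched as a simple review queue: flag copilot interactions whose prompt mentions credentials or whose response drew on an unusually large number of files. The `PromptEvent` fields, the keyword list, and the `max_files` threshold are hypothetical and chosen only for illustration. Real monitoring would rely on the audit data your copilot platform actually exposes.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword list; tune to your own environment.
SUSPICIOUS = re.compile(
    r"\b(password|credential|api key|secret|token)s?\b", re.IGNORECASE
)


@dataclass
class PromptEvent:
    user: str
    prompt: str
    files_accessed: int  # number of files the copilot read to answer


def flag_events(events, max_files=20):
    """Return (event, reasons) pairs for interactions worth a human review."""
    flagged = []
    for event in events:
        reasons = []
        if SUSPICIOUS.search(event.prompt):
            reasons.append("sensitive keyword")
        if event.files_accessed > max_files:
            reasons.append("unusually many files")
        if reasons:
            flagged.append((event, reasons))
    return flagged


events = [
    PromptEvent("carol", "Summarize last week's project meeting notes", 3),
    PromptEvent("dave", "List any files that contain passwords or API keys", 5),
    PromptEvent("erin", "Show customer spend for every account", 140),
]

flagged = flag_events(events)
for event, reasons in flagged:
    print(event.user, "->", ", ".join(reasons))
```

Keyword and volume checks are crude on their own, but they show the two signals named above, prompts and files accessed, being reviewed together.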

Incorporating these three areas of data security isn’t easy and usually can’t be accomplished with manual effort alone. Few organizations can safely adopt gen AI copilots without a holistic approach to data security and specific controls for the copilots themselves.

Prevent AI breaches with Varonis.

Varonis has focused on securing data for nearly 20 years, helping more than 8,000 customers worldwide protect what matters most.

We have applied our deep expertise to protect organizations planning to implement generative AI.

If you’re just beginning your gen AI journey, the best way to start is with our free Data Risk Assessment.

In less than 24 hours, you'll have a real-time view of your sensitive data risk to determine whether you can safely adopt a gen AI copilot. Varonis is also the industry’s first data security solution for Microsoft 365 Copilot and has a wide range of AI security capabilities for other copilots, LLMs, and gen AI tools.

To learn more, explore our AI security resources.

